publications

Publications by category in reverse chronological order. * denotes the corresponding author.

2026


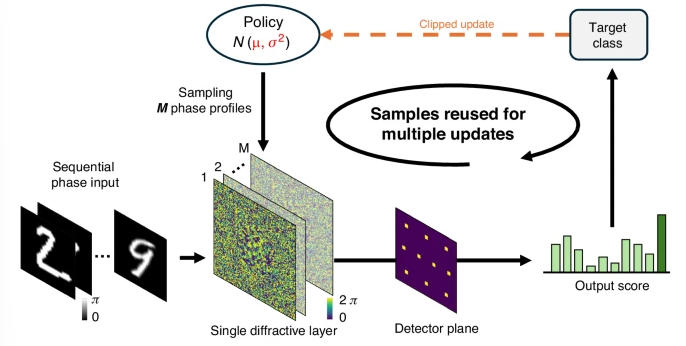

Model-free optical processors using in situ reinforcement learning with proximal policy optimization. Yuhang Li, Shiqi Chen, Tingyu Gong, and 1 more author. Light: Science & Applications, 2026.

@article{li2026model, title = {Model-free optical processors using in situ reinforcement learning with proximal policy optimization}, author = {Li, Yuhang and Chen, Shiqi and Gong, Tingyu and Ozcan, Aydogan}, journal = {Light: Science \& Applications}, volume = {15}, number = {1}, pages = {32}, year = {2026}, publisher = {Nature Publishing Group UK London}, url = {https://www.nature.com/articles/s41377-025-02148-7}, }

2025


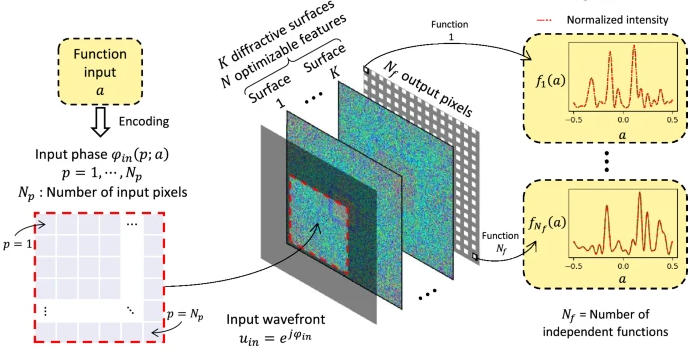

Massively parallel and universal approximation of nonlinear functions using diffractive processors. eLight, Nov 2025.

@article{SadmaneLight2025, author = {}, title = {Massively parallel and universal approximation of nonlinear functions using diffractive processors}, journal = {eLight}, year = {2025}, month = nov, volume = {5}, pages = {32}, publisher = {Springer}, doi = {10.1186/s43593-025-00113-w}, url = {https://link.springer.com/article/10.1186/s43593-025-00113-w}, }

Optical generative models. Shiqi Chen, Yuhang Li, Yuntian Wang, and 2 more authors. Nature, Aug 2025.

@article{ChenNature2025, author = {Chen, Shiqi and Li, Yuhang and Wang, Yuntian and Chen, Hanlong and Ozcan, Aydogan}, title = {Optical generative models}, journal = {Nature}, year = {2025}, month = aug, volume = {644}, pages = {903--911}, publisher = {Springer Nature}, doi = {10.1038/s41586-025-09446-5}, url = {https://www.nature.com/articles/s41586-025-09446-5}, issn = {0028-0836}, }

Diffractive snapshot spectral imaging needs long-range dependency. Zhengyue Zhuge, Chi Zhang, Jiahui Xu, and 3 more authors. Optics & Laser Technology, Aug 2025.

Hyperspectral imaging systems are valuable for various applications, but conventional spectral imaging systems often rely on time-consuming scanning mechanisms that hinder real-time imaging. Recently, diffractive snapshot spectral imaging (DSSI) systems have enabled a compact and lightweight approach to capturing spectral information from dynamic scenes. However, dedicated research on reconstructing DSSI-encoded images is still limited. Existing methods often adopt models originally designed for recovering hyperspectral images from clean RGB inputs, which are not well suited to DSSI systems. In this paper, we analyze the characteristics of DSSI systems and highlight the need for modeling long-range dependencies. By leveraging a novel state space model, we capture these dependencies at relatively low computational cost. We further introduce a local-enhanced branch to the original vision state space module and build a local-enhanced long-range dependency block. Additionally, we propose a data selection strategy to improve optimization stability and reconstruction performance. Our approach achieves state-of-the-art performance on benchmark datasets and demonstrates superior reconstruction quality on real-world captured data.

@article{ZHUGE2025113638, title = {Diffractive snapshot spectral imaging needs long-range dependency}, journal = {Optics \& Laser Technology}, volume = {192}, pages = {113638}, year = {2025}, issn = {0030-3992}, doi = {10.1016/j.optlastec.2025.113638}, url = {https://www.sciencedirect.com/science/article/pii/S0030399225012290}, author = {Zhuge, Zhengyue and Zhang, Chi and Xu, Jiahui and Li, Qi and Chen, Shiqi and Chen, Yueting}, keywords = {Diffractive snapshot spectral imaging, Hyperspectral image reconstruction, Computational imaging}, }

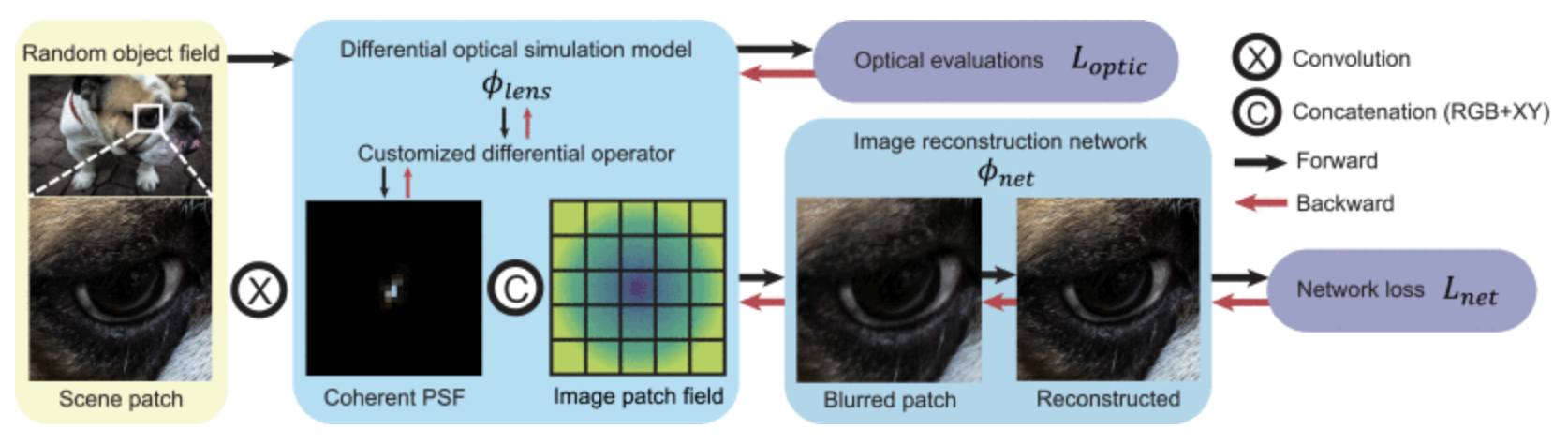

Successive Optimization of Optics and Post-Processing With Differentiable Coherent PSF Operator and Field Information. Zheng Ren, Jingwen Zhou, Wenguan Zhang, and 4 more authors. IEEE Transactions on Computational Imaging, Aug 2025.

@article{10976391, author = {Ren, Zheng and Zhou, Jingwen and Zhang, Wenguan and Yan, Jiapu and Chen, Bingkun and Feng, Huajun and Chen*, Shiqi}, journal = {IEEE Transactions on Computational Imaging}, title = {Successive Optimization of Optics and Post-Processing With Differentiable Coherent PSF Operator and Field Information}, year = {2025}, volume = {11}, pages = {599--608}, keywords = {Optical diffraction;Optical imaging;Adaptive optics;Lenses;Optimization;Optical refraction;Optical design;Optical surface waves;Apertures;Ray tracing;Joint lens design;differentiable optical simulation;memory-efficient backpropagation;image reconstruction}, doi = {10.1109/TCI.2025.3564173}, }

Isotropic PSF modification for optimized optical lens design in image-matching tasks. Bingkun Chen, Zheng Ren, Jingwen Zhou, and 5 more authors. Opt. Express, Mar 2025.

Image matching is widely used in many practical applications, such as high-precision map reconstruction, UAV localization, and autonomous driving. Since the optical lens directly influences the captured image, which in turn affects image-matching performance, designing optical lenses that are better suited for image-matching tasks under similar budget or size constraints is a highly valuable and practical problem. In this paper, based on the differentiable optics framework, we propose modifying the point spread function (PSF) of the optical lens to be isotropic across different fields of view (FoV) by introducing intuitive PSF-based loss functions. This modification benefits feature detection and description performance. Experimental results show that our method significantly improves the feature-matching performance of the given optical design, even though it does not achieve the highest image quality.

@article{Chen:25, author = {Chen, Bingkun and Ren, Zheng and Zhou, Jingwen and Li, Haoying and Xu, Hao and Xu, Nan and Feng, Huajun and Chen*, Shiqi}, journal = {Opt. Express}, keywords = {Image metrics; Lens design; Nonlinear optics applications; Optical systems; Optical transfer functions; Systems design}, number = {5}, pages = {10538--10554}, publisher = {Optica Publishing Group}, title = {Isotropic PSF modification for optimized optical lens design in image-matching tasks}, volume = {33}, month = mar, year = {2025}, doi = {10.1364/OE.554059}, }
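The abstract does not specify its PSF-based loss functions. If "isotropic" is read as "rotationally symmetric at each field point" (our interpretation), one simple way to quantify it is the eigenvalue ratio of the PSF's intensity-weighted second-moment matrix. The sketch below implements that measure. The name `psf_anisotropy` and the measure itself are hypothetical illustrations and are not the paper's loss.

```python
import numpy as np

def psf_anisotropy(psf):
    """Return 0 for a rotationally symmetric PSF and approach 1 for a line-like one.

    Hypothetical measure: 1 - (smaller / larger) eigenvalue of the PSF's
    second-moment (covariance) matrix. This is not the paper's loss.
    """
    p = np.asarray(psf, dtype=np.float64)
    p = p / p.sum()                          # treat the PSF as a 2D distribution
    y, x = np.mgrid[0:p.shape[0], 0:p.shape[1]]
    cx, cy = (p * x).sum(), (p * y).sum()    # intensity centroid
    dx, dy = x - cx, y - cy
    cov = np.array([[(p * dx * dx).sum(), (p * dx * dy).sum()],
                    [(p * dx * dy).sum(), (p * dy * dy).sum()]])
    lo, hi = np.linalg.eigvalsh(cov)         # eigenvalues in ascending order
    return 1.0 - lo / hi
```

In a differentiable-optics pipeline, the same operations would be written in an autodiff framework and averaged over PSFs sampled at several field angles to form a scalar penalty.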

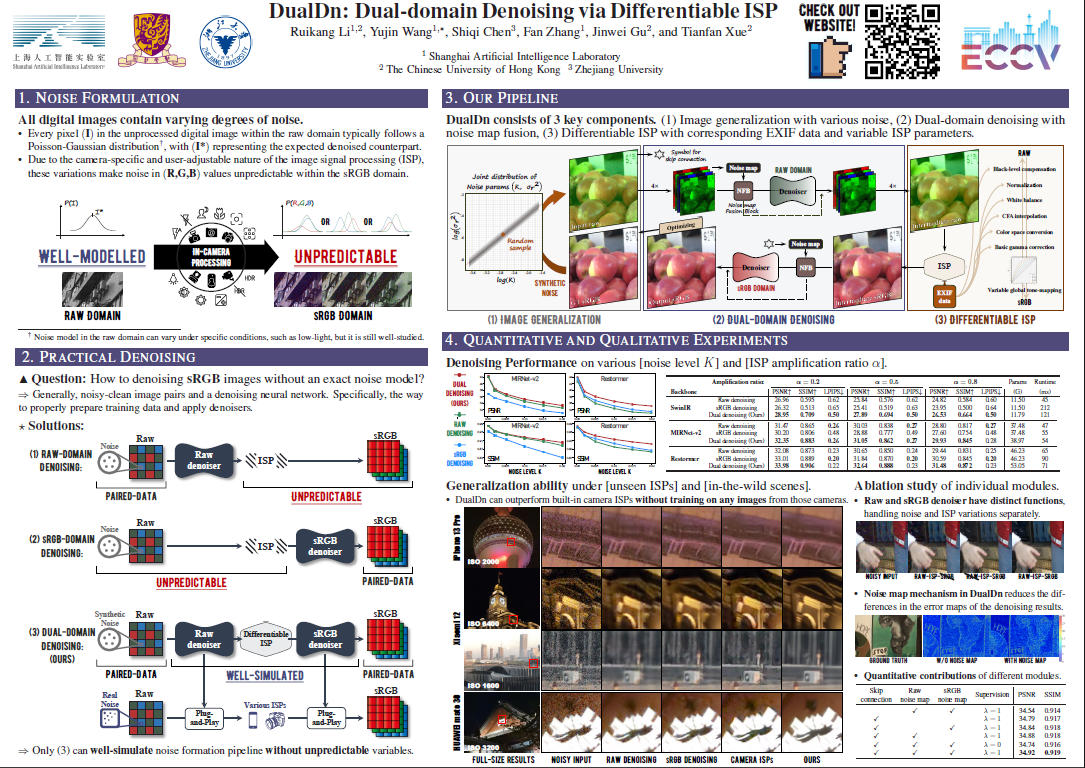

DualDn: Dual-domain denoising via differentiable ISP. Ruikang Li, Yujin Wang, Shiqi Chen, and 3 more authors. In European Conference on Computer Vision, Mar 2025.

Image denoising is a critical component in a camera's Image Signal Processing (ISP) pipeline. There are two typical ways to inject a denoiser into the ISP pipeline: applying a denoiser directly to captured raw frames (raw domain) or to the ISP's output sRGB images (sRGB domain). However, both approaches have limitations. Residual noise from raw-domain denoising can be amplified by the subsequent ISP processing, and the sRGB domain struggles to handle spatially varying noise since it only sees noise distorted by the ISP. Consequently, most raw- or sRGB-domain denoising works only for specific noise distributions and ISP configurations. To address these challenges, we propose DualDn, a novel learning-based dual-domain denoising method. Unlike previous single-domain denoising, DualDn consists of two denoising networks: one in the raw domain and one in the sRGB domain. The raw-domain denoising adapts to sensor-specific noise as well as spatially varying noise levels, while the sRGB-domain denoising adapts to ISP variations and removes residual noise amplified by the ISP. Both denoising networks are connected with a differentiable ISP, which is trained end-to-end and discarded during the inference stage. With this design, DualDn achieves greater generalizability than most learning-based denoising methods, as it can adapt to different unseen noises, ISP parameters, and even novel ISP pipelines. Experiments show that DualDn achieves state-of-the-art performance and can adapt to different denoising architectures. Moreover, DualDn can be used as a plug-and-play denoising module with real cameras without retraining, and it still demonstrates better performance than commercial on-camera denoising. The project website is available at: https://openimaginglab.github.io/DualDn/.

@inproceedings{Ruikang2024, title = {{DualDn}: Dual-domain denoising via differentiable {ISP}}, author = {Li, Ruikang and Wang, Yujin and Chen, Shiqi and Zhang, Fan and Gu, Jinwei and Xue, Tianfan}, booktitle = {European Conference on Computer Vision}, pages = {160--177}, year = {2025}, organization = {Springer}, }

2024


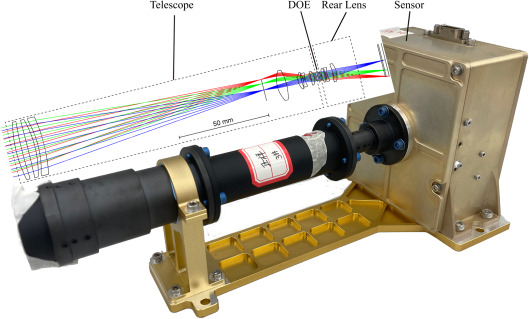

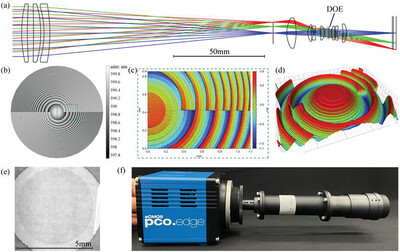

Video-Rate Spectral Imaging Based on Diffractive-Refractive Hybrid Optics. Hao Xu, Haiquan Hu, Nan Xu, and 7 more authors. Laser & Photonics Reviews, Mar 2024.

With the advancement of computational imaging, a large number of spectral imaging systems based on encoding-decoding have emerged, among which phase-encoding spectral imaging systems have attracted widespread interest. Conventional phase-encoding systems suffer from severe image degradation and limited light throughput. To address these challenges and achieve video-rate spectral imaging with high spatial resolution and spectral accuracy, a novel optical system based on diffractive-refractive hybrid optics is proposed. Here, a diffractive optical element performs the imaging and dispersion functions, while a rear lens shortens the system's back focal length and reduces the size of the point spread function. Meanwhile, convolutional neural network-based spectral reconstruction algorithms are employed to reconstruct the spectral data cubes from diffraction-blurred images. A compact, cost-effective, and portable prototype has been constructed. It can acquire and reconstruct 30 spectral data cubes per second, each with dimensions of 1080 × 1280 × 43, in the spectral range of 480–900 nm with a 10 nm spectral interval. The optical system has the potential to broaden the application scope of phase-encoding spectral imaging systems in various scenarios.

@article{xuhaolpr, author = {Xu, Hao and Hu, Haiquan and Xu, Nan and Chen, Bingkun and Luo, Peng and Jiang, Tingting and Xu, Zhihai and Li, Qi and Chen, Shiqi and Chen, Yueting}, title = {Video-Rate Spectral Imaging Based on Diffractive-Refractive Hybrid Optics}, journal = {Laser \& Photonics Reviews}, volume = {18}, number = {12}, pages = {2400646}, keywords = {computational imaging, computational spectral imaging, deep learning, diffractive optical element, video-rate}, doi = {10.1002/lpor.202400646}, url = {https://onlinelibrary.wiley.com/doi/abs/10.1002/lpor.202400646}, year = {2024}, }
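As a quick consistency check on the numbers quoted in this abstract, sampling 480–900 nm inclusively at a 10 nm interval gives exactly 43 bands. The raw data-rate figure below is only back-of-the-envelope arithmetic from those numbers. It is not a figure reported in the paper, and `band_count` is an illustrative helper.

```python
def band_count(start_nm, stop_nm, step_nm):
    """Number of samples on the inclusive grid start, start + step, ..., stop."""
    return (stop_nm - start_nm) // step_nm + 1

bands = band_count(480, 900, 10)           # spectral bands per cube
values_per_cube = 1080 * 1280 * bands      # spatial pixels x spectral bands
values_per_second = values_per_cube * 30   # at 30 cubes per second
print(bands, values_per_cube, values_per_second)
```

This prints `43 59443200 1783296000`, so the system reconstructs roughly 1.8 × 10⁹ spectral values per second.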

End-to-end automatic lens design with a differentiable diffraction model. Wenguan Zhang, Zheng Ren, Jingwen Zhou, and 5 more authors. Opt. Express, Dec 2024.

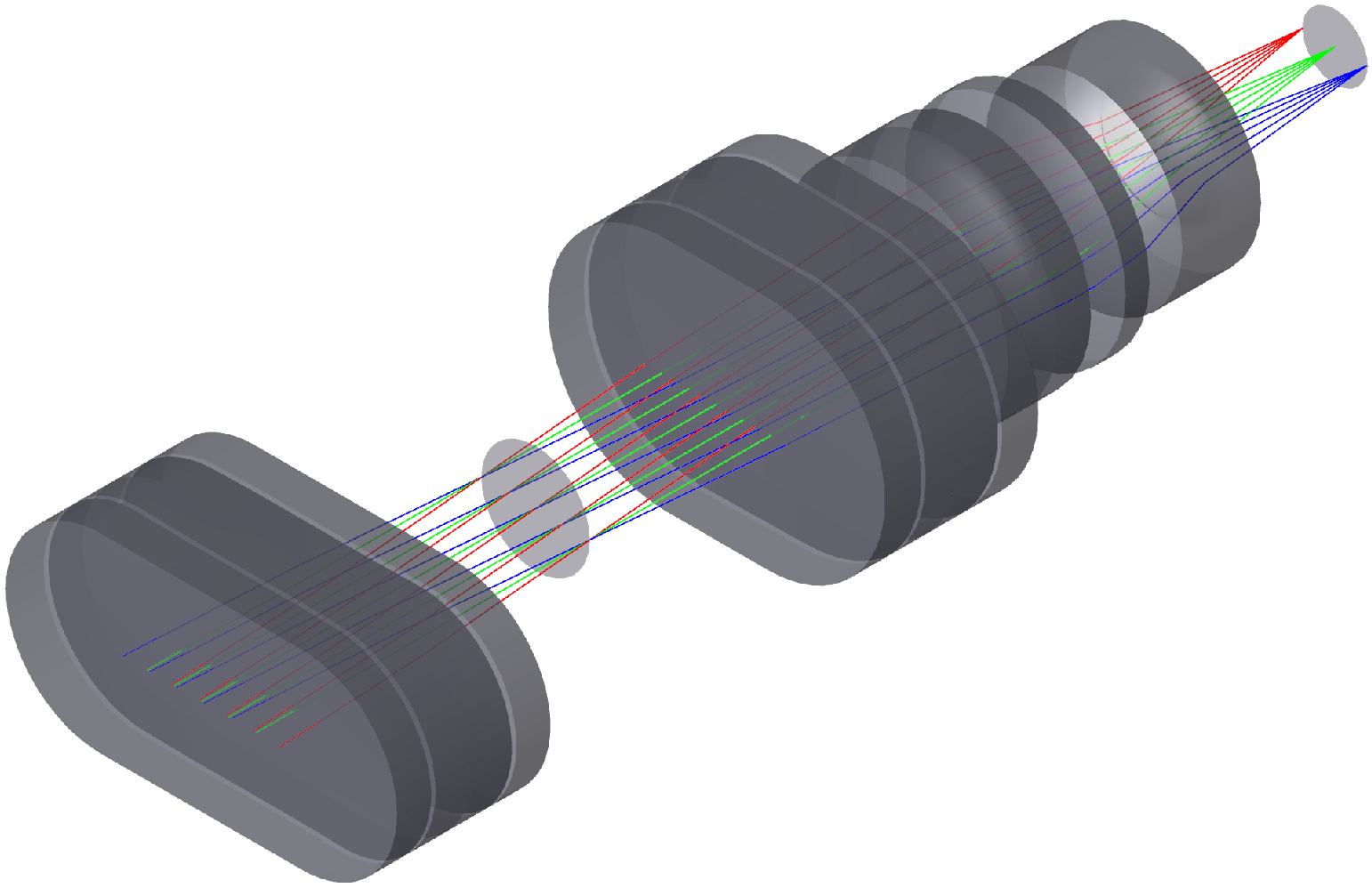

Lens design is challenging and time-consuming, requiring tedious human trial and error. Recently, joint design of lenses and image processing networks based on differentiable ray tracing has emerged, offering a way to reduce the difficulty of traditional lens design. However, existing joint design pipelines cannot optimize all parameters, including materials and high-order aspheric terms, nor do they use diffraction theory to calculate point spread functions (PSFs) accurately. In this work, we propose a fully automated joint design framework, aimed especially at smartphone telephoto lenses. The framework starts from optical design specifications, uses Delano diagrams to calculate reasonable initial optical structures, and jointly optimizes the lens system and the image processing network. To account for diffraction, a differentiable PSF calculation method based on the Fresnel-Kirchhoff diffraction model is used for end-to-end joint optimization. This work can reduce the difficulty of lens design and provides an accurate, diffraction-aware PSF calculation method for end-to-end joint optimization.

@article{Zhang:24, author = {Zhang, Wenguan and Ren, Zheng and Zhou, Jingwen and Chen, Shiqi and Feng, Huajun and Li, Qi and Xu, Zhihai and Chen, Yueting}, journal = {Opt. Express}, keywords = {Diffractive optical elements; Imaging systems; Lens design; Optical components; Optical systems; Systems design}, number = {25}, pages = {44328--44345}, publisher = {Optica Publishing Group}, title = {End-to-end automatic lens design with a differentiable diffraction model}, volume = {32}, month = dec, year = {2024}, doi = {10.1364/OE.540590}, }

Calibration-free deep optics for depth estimation with precise simulation. Zhengyue Zhuge, Hao Xu, Shiqi Chen, and 5 more authors. Optics and Lasers in Engineering, Dec 2024.

Monocular depth estimation is an important computer vision task widely explored in fields such as autonomous driving and robotics. Recently, deep optics approaches that optimize diffractive optical elements (DOEs) with differentiable frameworks have improved depth estimation performance. However, they consider only on-axis point spread functions (PSFs) and are highly dependent on system calibration. We propose a precise end-to-end paradigm combining ray tracing and angular spectrum diffraction. With this approach, we jointly train a DOE and a reconstruction network for depth estimation and image restoration. Unlike conventional deep optics approaches, we accurately simulate both on-axis and off-axis PSFs, eliminating the need for calibration. We have validated the high similarity between captured and simulated PSFs at a 19.4° field of view (FOV). Our optimized phase mask and network achieve state-of-the-art performance among semantic-based monocular depth estimation and existing deep optics methods. In real-world experiments, our prototype camera produces depth distributions similar to those of the Intel D455 infrared structured-light camera.
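The abstract combines ray tracing with angular spectrum diffraction. Neither the ray-tracing half nor the DOE parameterization is described here. The sketch below therefore covers only the standard angular spectrum method for scalar, monochromatic free-space propagation on a square grid with pitch `dx`. The function name and arguments are our own and are not the paper's code.

```python
import numpy as np

def angular_spectrum_propagate(field, wavelength, dx, z):
    """Propagate a sampled 2D complex field a distance z (all lengths in metres).

    Standard angular spectrum method: decompose into plane waves (FFT),
    apply the free-space transfer function, and recombine (inverse FFT).
    """
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=dx)             # spatial frequencies along x
    fy = np.fft.fftfreq(ny, d=dx)             # spatial frequencies along y
    FX, FY = np.meshgrid(fx, fy)              # shape (ny, nx)
    arg = (1.0 / wavelength) ** 2 - FX**2 - FY**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Propagating components get a phase delay; evanescent ones (arg <= 0) are dropped
    H = np.where(arg > 0, np.exp(1j * kz * z), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * H)
```

To obtain an intensity PSF for a phase mask `phase`, one could propagate `np.exp(1j * phase)` to the sensor plane and take `np.abs(...)**2`. Off-axis PSFs additionally require a tilted or ray-traced incident wavefront rather than a flat one.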


Wavelength encoding spectral imaging based on the combination of deeply learned filters and an RGB camera. Hao Xu, Shiqi Chen, Haiquan Hu, and 7 more authors. Opt. Express, Mar 2024.

Hyperspectral imaging is a critical tool for gathering spatial-spectral information in various scientific research fields. Owing to improvements in spectral reconstruction algorithms, significant progress has been made in reconstructing hyperspectral images from commonly acquired RGB images. However, because the input is limited, reconstructing spectral information from RGB images is ill-posed. Furthermore, conventional camera color filter arrays (CFA) are designed for human perception and are not optimal for spectral reconstruction. To increase the diversity of wavelength encoding, we propose placing broadband encoding filters in front of the RGB camera. In this configuration, the spectral sensitivity of the imaging system is determined by both the filters and the camera itself. To achieve an optimal encoding scheme, we use an end-to-end optimization framework to automatically design the filters' transmittance functions and optimize the weights of the spectral reconstruction network. Simulation experiments show that our proposed spectral reconstruction network has excellent spectral mapping capabilities. Additionally, our joint wavelength encoding imaging framework outperforms traditional RGB imaging systems. We fabricate the deeply learned filters and conduct real-scene capture experiments. The spectral reconstruction results show good spatial resolution and spectral accuracy.

@article{Xu:24, author = {Xu, Hao and Chen, Shiqi and Hu, Haiquan and Luo, Peng and Jin, Zheyan and Li, Qi and Xu, Zhihai and Feng, Huajun and Chen, Yueting and Jiang, Tingting}, journal = {Opt. Express}, keywords = {Computational imaging; Diffractive optical elements; Imaging systems; Imaging techniques; Spatial resolution; Spectral properties}, number = {7}, pages = {10741--10760}, publisher = {Optica Publishing Group}, title = {Wavelength encoding spectral imaging based on the combination of deeply learned filters and an RGB camera}, volume = {32}, month = mar, year = {2024}, doi = {10.1364/OE.506997}, }
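The system model in this abstract, where spectral sensitivity is set jointly by the filter and the camera, can be written as a discrete forward model. This sketch makes several assumptions: a single filter, a linear and noise-free camera (no ISP), and all spectra sampled on one shared wavelength grid. The function name and array layout are illustrative and are not taken from the paper. With several filters, one would stack the per-filter outputs along the channel axis.

```python
import numpy as np

def encode_with_filter(cube, filter_T, camera_sens):
    """Simulate a filter-plus-RGB-camera measurement (hypothetical helper).

    cube:        (H, W, L) scene spectral radiance at L wavelengths
    filter_T:    (L,) transmittance of the broadband encoding filter, in [0, 1]
    camera_sens: (L, 3) spectral sensitivities of the R, G, B channels
    returns:     (H, W, 3) linear, noise-free RGB measurement
    """
    effective = filter_T[:, None] * camera_sens  # combined system sensitivity, (L, 3)
    # Integrate over wavelength: sum_l cube[h, w, l] * effective[l, c]
    return np.einsum("hwl,lc->hwc", cube, effective)
```

In an end-to-end framework of the kind the abstract describes, `filter_T` would be a learnable parameter and the output would feed the reconstruction network.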

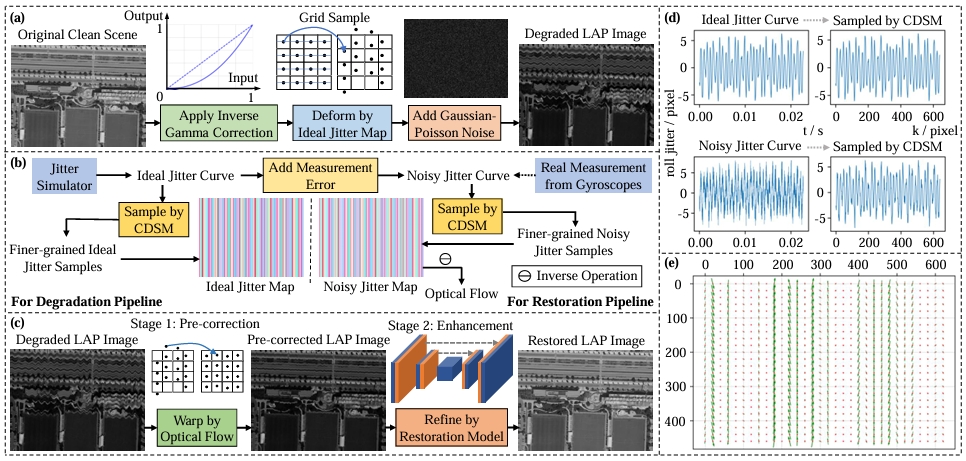

Deep Linear Array Pushbroom Image Restoration: A Degradation Pipeline and Jitter-Aware Restoration Network. Zida Chen, Ziran Zhang, Haoying Li, and 6 more authors. arXiv preprint, Jan 2024.

Linear Array Pushbroom (LAP) imaging technology is widely used in remote sensing. However, images acquired through LAP always suffer from distortion and blur because of camera jitter. Traditional methods for restoring LAP images, such as algorithms estimating the point spread function (PSF), exhibit limited performance. To tackle this issue, we propose a Jitter-Aware Restoration Network (JARNet) that removes the distortion and blur in two stages. In the first stage, we formulate an Optical Flow Correction (OFC) block to refine the optical flow of the degraded LAP images, producing pre-corrected images in which most of the distortion is alleviated. In the second stage, to further enhance the pre-corrected images, we integrate two jitter-aware techniques within the Spatial and Frequency Residual (SFRes) block: 1) introducing Coordinate Attention (CoA) to the SFRes block to capture the jitter state in orthogonal directions; 2) manipulating image features in both the spatial and frequency domains to leverage local and global priors. Additionally, we develop a data synthesis pipeline that applies the Continue Dynamic Shooting Model (CDSM) to simulate realistic degradation in LAP images. Together, the proposed JARNet and LAP image synthesis pipeline establish a foundation for addressing this intricate challenge. Extensive experiments demonstrate that the proposed two-stage method outperforms state-of-the-art image restoration models. Code is available at https://github.com/JHW2000/JARNet.

@misc{chen2024deep, title = {Deep Linear Array Pushbroom Image Restoration: A Degradation Pipeline and Jitter-Aware Restoration Network}, author = {Chen, Zida and Zhang, Ziran and Li, Haoying and Li, Menghao and Chen, Yueting and Li, Qi and Feng, Huajun and Xu, Zhihai and Chen*, Shiqi}, month = jan, year = {2024}, eprint = {2401.08171}, archiveprefix = {arXiv}, primaryclass = {cs.CV}, }

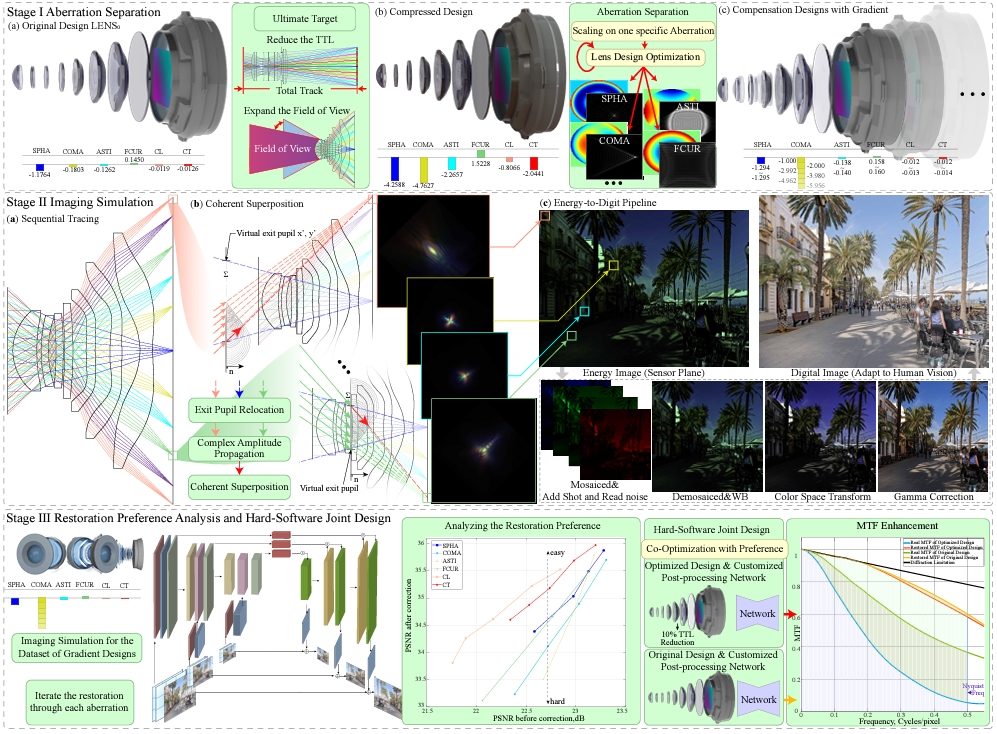

Revealing the preference for correcting separated aberrations in joint optic-image design. Jingwen Zhou, Shiqi Chen*, Zheng Ren, and 5 more authors. Optics and Lasers in Engineering, Jan 2024.

The joint design of the optical system and the downstream algorithm is a challenging and promising task. Because of the need to balance the global optimum of the imaging system against the computational cost of physical simulation, existing methods cannot achieve efficient joint design of complex systems such as smartphones and drones. In this work, starting from the perspective of optical design, we characterize the optics with separated aberrations. Additionally, to bridge the hardware and software without gradients, an image simulation system is presented to reproduce the genuine imaging procedure of lenses with large fields of view. For aberration correction, we propose a network to perceive and correct the spatially varying aberrations and validate its superiority over state-of-the-art methods. Comprehensive experiments reveal that the preference for correcting separated aberrations in joint design is as follows: longitudinal chromatic aberration, lateral chromatic aberration, spherical aberration, field curvature, and coma, with astigmatism coming last. Drawing on this preference, we achieve a 10% reduction in the total track length of a consumer-level mobile phone lens module. Moreover, this procedure leaves more room for manufacturing deviations, realizing high-quality enhancement in computational photography. The optimization paradigm provides new insight into the practical joint design of sophisticated optical systems and post-processing algorithms.

@article{ZHOU2024108220, title = {Revealing the preference for correcting separated aberrations in joint optic-image design}, journal = {Optics and Lasers in Engineering}, volume = {178}, pages = {108220}, year = {2024}, issn = {0143-8166}, doi = {10.1016/j.optlaseng.2024.108220}, url = {https://www.sciencedirect.com/science/article/pii/S0143816624001994}, author = {Zhou, Jingwen and Chen*, Shiqi and Ren, Zheng and Zhang, Wenguan and Yan, Jiapu and Feng, Huajun and Li, Qi and Chen, Yueting}, keywords = {Joint optic-image design, Aberration correction preference, Manufacturing deviations, Imaging simulation, Computational photography}, }

2023


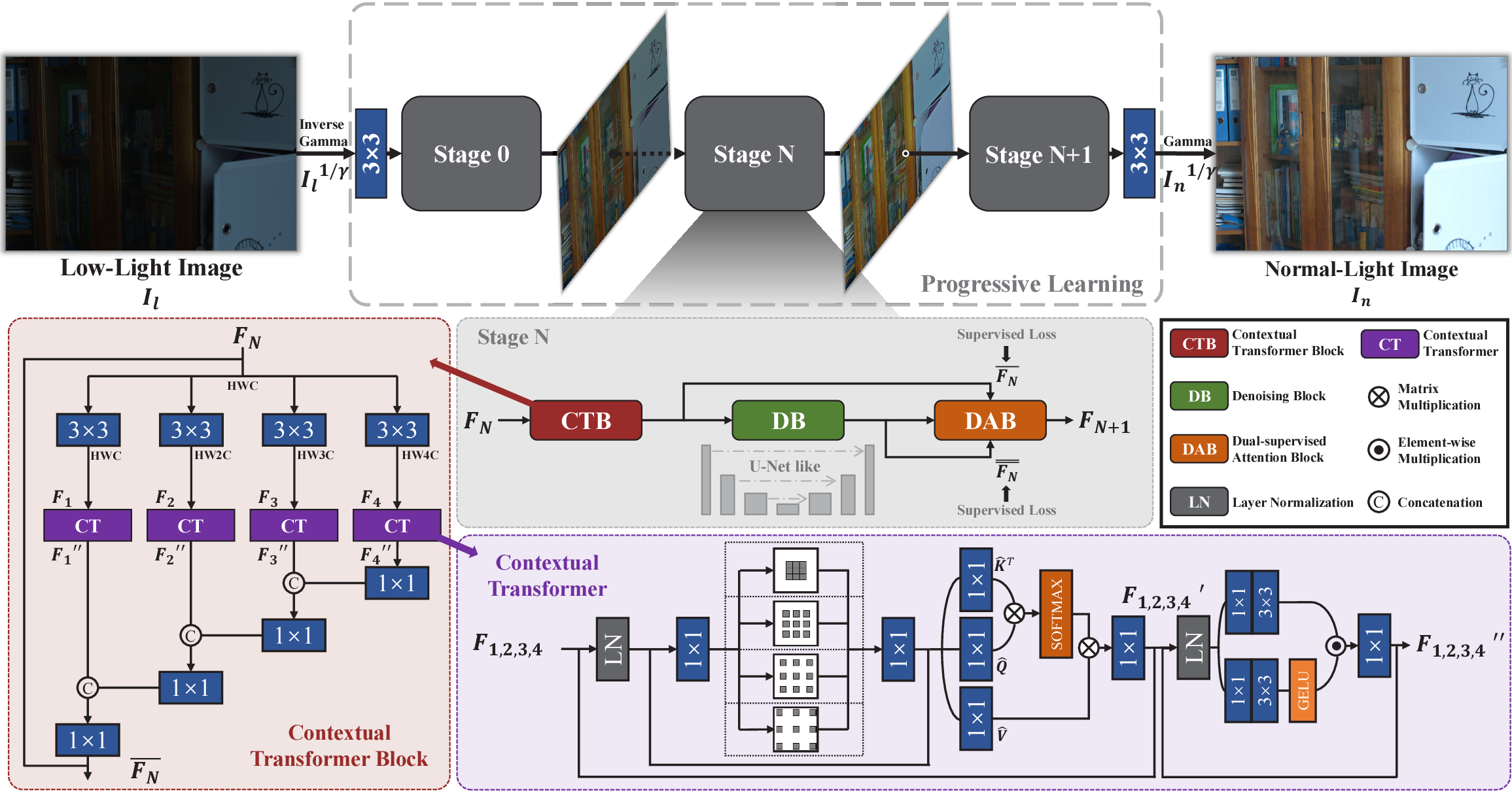

Dark2Light: multi-stage progressive learning model for low-light image enhancement. Rui-Kang Li, Meng-Hao Li, Shiqi Chen*, and 2 more authors. Opt. Express, Dec 2023.

Due to severe noise and extremely low illuminance, restoring normal-light images from low-light images remains challenging. Unpredictable noise can become entangled with the weak signals, making it difficult for models to learn signals from low-light images, while simply restoring the illumination can amplify the noise. To address this dilemma, we propose Dark2Light, a multi-stage model that progressively restores normal-light images from low-light images. Within each stage, we divide low-light image enhancement (LLIE) into two main problems: (1) illumination enhancement and (2) noise removal. First, we convert the image from sRGB to linear RGB so that illumination enhancement is approximately linear, and we design a contextual transformer block to perform illumination enhancement in a coarse-to-fine manner. Second, a U-Net-shaped denoising block is adopted for noise removal. Lastly, we design a dual-supervised attention block to facilitate progressive restoration and feature transfer. Extensive experimental results demonstrate that the proposed Dark2Light outperforms state-of-the-art LLIE methods both quantitatively and qualitatively.

@article{Li:23, author = {Li, Rui-Kang and Li, Meng-Hao and Chen*, Shiqi and Chen, Yue-Ting and Xu, Zhi-Hai}, journal = {Opt. Express}, keywords = {Illuminance; Image enhancement; Image metrics; Imaging techniques; Neural networks; Shot noise}, number = {26}, pages = {42887--42900}, publisher = {Optica Publishing Group}, title = {Dark2Light: multi-stage progressive learning model for low-light image enhancement}, volume = {31}, month = dec, year = {2023}, doi = {10.1364/OE.507966}, }
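The sRGB-to-linear conversion mentioned in this abstract is, in its standard form, the IEC 61966-2-1 sRGB transfer function. The sketch below assumes 8-bit values have already been normalized to [0, 1] and uses the standard piecewise curve. The paper's exact implementation is not given, so treat these helper functions as illustrative.

```python
import numpy as np

def srgb_to_linear(srgb):
    """Standard sRGB decoding (IEC 61966-2-1); input normalized to [0, 1]."""
    c = np.clip(np.asarray(srgb, dtype=np.float64), 0.0, 1.0)
    # Linear segment near black, power-law segment elsewhere
    return np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)

def linear_to_srgb(linear):
    """Inverse transform, e.g. to display an enhanced linear-RGB result."""
    c = np.clip(np.asarray(linear, dtype=np.float64), 0.0, 1.0)
    return np.where(c <= 0.0031308, 12.92 * c, 1.055 * c ** (1 / 2.4) - 0.055)
```

In linear RGB, a brightness gain acts proportionally on scene irradiance. For example, `linear_to_srgb(4.0 * srgb_to_linear(img))` brightens an image by two stops, with clipping at 1. Applying the same gain directly to sRGB values would distort tones.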

Design of an optimized Alvarez lens based on the fifth-order polynomial combination. Zhichao Ye, Jiapu Yan, Tingting Jiang, and 5 more authors. Appl. Opt., Dec 2023.

This paper proposes an optimized design of the Alvarez lens using a combination of three fifth-order X-Y polynomials. The design effectively minimizes the curvature of the lens surface to meet manufacturing requirements. The phase modulation function and aberrations of the proposed lens are evaluated using first-order optical analysis. Simulations compare the proposed lens with the traditional Alvarez lens in terms of surface curvature, zoom capability, and imaging quality. The results show that the proposed lens achieves a 26.36% reduction in the maximum curvature of the Alvarez lens (with coefficient A = 4 × 10⁻⁴ and a diameter of 26 mm) while preserving its original zoom capability and imaging quality.

@article{Ye:23, author = {Ye, Zhichao and Yan, Jiapu and Jiang, Tingting and Chen, Shiqi and Xu, Zhihai and Feng, Huajun and Li, Qi and Chen, Yueting}, journal = {Appl. Opt.}, keywords = {Freeform surfaces; Lens design; Optical elements; Optical imaging; Optical systems; Phase modulation}, number = {34}, pages = {9072--9081}, publisher = {Optica Publishing Group}, title = {Design of an optimized Alvarez lens based on the fifth-order polynomial combination}, volume = {62}, month = dec, year = {2023}, doi = {10.1364/AO.501295}, }
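For reference, here is the textbook cubic Alvarez element, assumed here to be the kind of "traditional Alvarez lens" the abstract compares against. It shows why a lateral shift produces variable optical power. Each plate carries the profile below, and two complementary plates are shifted by ±δ along x:

$$
t(x, y) = A\left(x y^{2} + \frac{x^{3}}{3}\right)
$$

$$
t(x+\delta, y) - t(x-\delta, y) = 2A\delta\left(x^{2} + y^{2}\right) + \frac{2}{3}A\delta^{3}
$$

The first term is a paraxial lens profile and the second is a constant piston. For a thin element of refractive index n, the optical path difference is (n − 1) times this thickness difference. Equating (n − 1)·2Aδ(x² + y²) with the paraxial lens term (x² + y²)/(2f) gives the magnitude of the optical power:

$$
\frac{1}{f} = 4A\delta\,(n - 1)
$$

Power therefore grows linearly with both the shift δ and the coefficient A, and its sign depends on the chosen convention. This derivation covers only the cubic case, not the paper's combination of fifth-order polynomials.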

Image restoration for optical zooming system based on Alvarez lenses. Jiapu Yan, Zhichao Ye, Tingting Jiang, and 5 more authors. Opt. Express, Oct 2023.

Alvarez lenses are known for their ability to achieve a broad range of optical power adjustment by utilizing complementary freeform surfaces. However, these lenses suffer from optical aberrations, which restrict their potential applications. To address this issue, we propose a field of view (FOV) attention image restoration model for continuous zooming. In order to simulate the degradation of optical zooming systems based on Alvarez lenses (OZA), a baseline OZA is designed where the polynomial for the Alvarez lenses consists of only three coefficients. By computing spatially varying point spread functions (PSFs), we simulate the degraded images of multiple zoom configurations and conduct restoration experiments. The results demonstrate that our approach surpasses the compared methods in the restoration of degraded images across various zoom configurations while also exhibiting strong generalization capabilities under untrained configurations.

@article{Yan:23, author = {Yan, Jiapu and Ye, Zhichao and Jiang, Tingting and Chen, Shiqi and Feng, Huajun and Xu, Zhihai and Li, Qi and Chen, Yueting}, journal = {Opt. Express}, keywords = {Diffractive optical elements; Image restoration; Imaging systems; Optical aberration; Optical systems; Systems design}, number = {22}, pages = {35765--35776}, publisher = {Optica Publishing Group}, title = {Image restoration for optical zooming system based on Alvarez lenses}, volume = {31}, month = oct, year = {2023}, doi = {10.1364/OE.500967}, }

Reliable Image Dehazing by NeRF. Zheyan Jin, Shiqi Chen, Huajun Feng, and 3 more authors. arXiv preprint, May 2023.

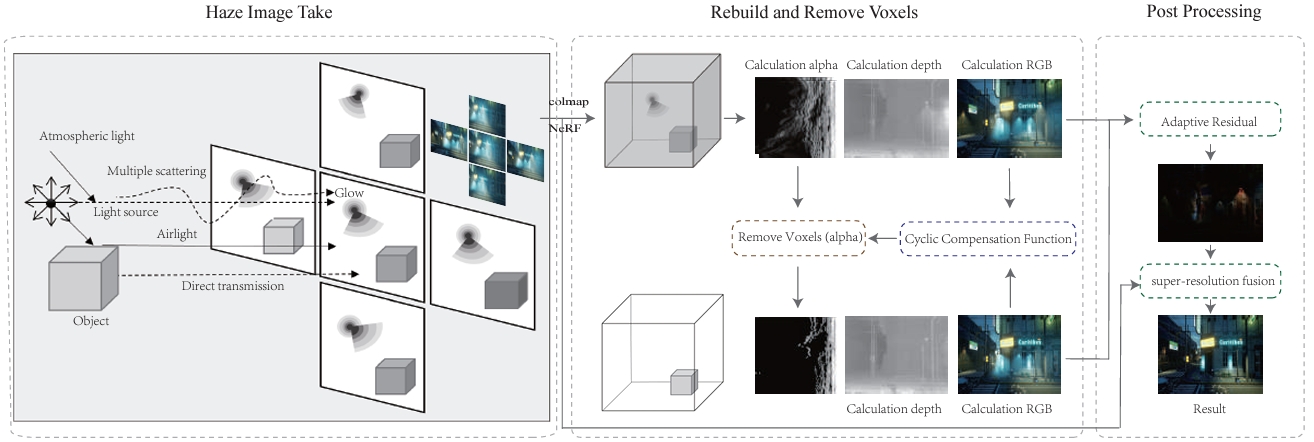

We present an image dehazing algorithm that delivers high quality, applies to a wide range of scenes, and requires no training data or priors. We analyze the defects of the original dehazing model and propose a new, reliable haze reconstruction and dehazing model that combines an optical scattering model with a computer graphics lighting rendering model. Based on the new haze model and the images captured by the cameras, we can reconstruct the three-dimensional space, accurately compute the objects and haze in that space, and use the transparency relationship of haze to perform accurate haze removal. To obtain a 3D simulation dataset, we used Unreal Engine 5. To obtain real-shot data in different scenes, we used fog generators, array cameras, mobile phones, underwater cameras, and drones to capture haze data. We use theoretical derivation and experiments on both simulated and real-shot datasets to demonstrate the feasibility of the new method. Compared with various other methods, ours leads by a wide margin on quantitative metrics (4 dB higher on average across scenes), preserves more natural color, is more robust across scenarios, and performs best in subjective perception.

@misc{jin2023reliable, title = {Reliable Image Dehazing by NeRF}, author = {Jin, Zheyan and Chen, Shiqi and Feng, Huajun and Xu, Zhihai and Li, Qi and Chen, Yueting}, month = may, year = {2023}, eprint = {2303.09153}, archiveprefix = {arXiv}, primaryclass = {cs.CV}, }
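The abstract refers to "the original dehazing model." We assume this means the widely used atmospheric scattering model; the source does not state this. The sketch below shows that baseline, in which per-pixel transmission t = exp(−βd) ties haze to scene depth d. This hints at why recovering 3D geometry can help. The paper's new model, which combines scattering with rendering, is not reproduced here, and the function names are illustrative.

```python
import numpy as np

def add_haze(clear, depth, airlight, beta):
    """Synthesize haze with the classic scattering model I = J*t + A*(1 - t).

    clear:    (H, W, 3) haze-free image J in [0, 1]
    depth:    (H, W) scene depth; beta is the scattering coefficient per depth unit
    airlight: global atmospheric light A (scalar or length-3 array)
    """
    t = np.exp(-beta * depth)[..., None]  # transmission, broadcast over RGB
    return clear * t + airlight * (1.0 - t), t

def remove_haze(hazy, t, airlight, t_min=0.1):
    """Invert the model given t and A: J = (I - A) / t + A."""
    t = np.maximum(t, t_min)  # avoid amplifying noise where t is tiny
    return (hazy - airlight) / t + airlight
```

When t and A are known exactly, the inversion is exact. In practice, the difficulty lies in estimating them, which is where geometry or learned priors come in.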

Let Segment Anything Help Image Dehaze. Zheyan Jin, Shiqi Chen, Yueting Chen, and 2 more authors. arXiv preprint, Jun 2023.

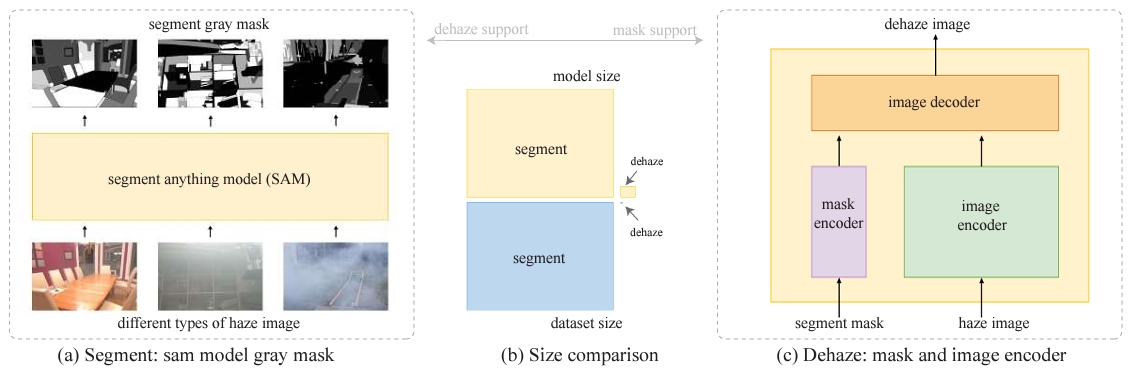

Large language models and high-level vision models have achieved impressive performance improvements with large datasets and model sizes. However, low-level computer vision tasks, such as image dehazing and deblurring, still rely on a small number of datasets and small-sized models, which generally leads to overfitting and local optima. To address this, we propose a framework to integrate large-model priors into low-level computer vision tasks. As with image segmentation, haze degradation is texture-related. We therefore propose grayscale coding, network channel expansion, and pre-dehaze structures to integrate large-model prior knowledge into any low-level dehazing network. We demonstrate the effectiveness and applicability of large models in guiding low-level visual tasks through comparison experiments across different datasets and algorithms. Finally, we demonstrate the effect of grayscale coding, network channel expansion, and recurrent network structures through ablation experiments. Without requiring additional data or training resources, we show that integrating large-model prior knowledge improves dehazing performance and saves training time for low-level visual tasks.

@misc{jin2023let, title = {Let Segment Anything Help Image Dehaze}, author = {Jin, Zheyan and Chen, Shiqi and Chen, Yueting and Xu, Zhihai and Feng, Huajun}, month = jun, year = {2023}, eprint = {2306.15870}, archiveprefix = {arXiv}, primaryclass = {cs.CV}, }

Toward Real Flare Removal: A Comprehensive Pipeline and A New Benchmark. Zheyan Jin, Shiqi Chen, Huajun Feng, and 2 more authors. arXiv preprint, Jul 2023.

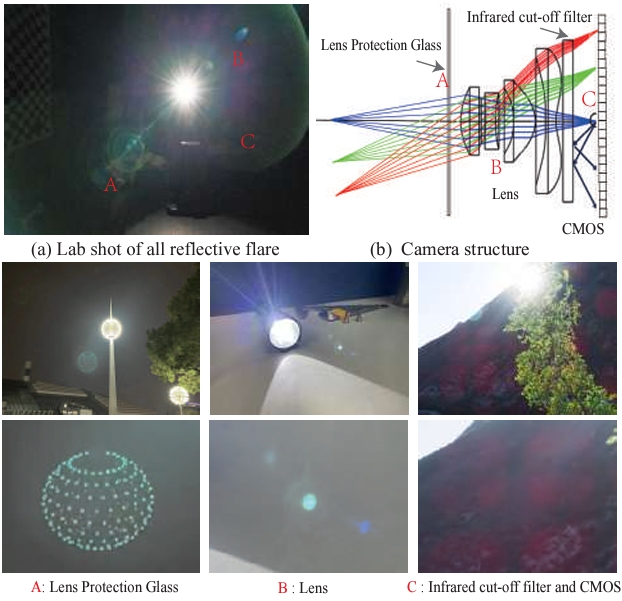

When photographing under-illuminated scenes, complex light sources often leave strong flare artifacts in images, with intensity, spectrum, reflection, and aberration all contributing to the deterioration. Besides degrading image quality, flare also affects the performance of downstream vision applications. Removing lens flare and ghosts is therefore a challenging problem, especially in low-light environments. However, existing flare removal methods are mainly limited by inadequate simulation and real-world capture: scattered flares come in only a single category, and reflected ghosts are unavailable. A comprehensive deterioration procedure is therefore crucial for constructing flare removal datasets. Based on theoretical analysis and real-world evaluation, we propose a well-developed methodology for generating data pairs with flare deterioration. The procedure is comprehensive, reproducing both the similarity of scattered flares and the symmetric effect of reflected ghosts. Moreover, we construct a real-shot pipeline that processes the effects of scattering and reflective flares separately, aiming to directly generate data for end-to-end methods. Experimental results show that the proposed methodology adds diversity to existing flare datasets and constructs a comprehensive mapping procedure for flare data pairs. Our method also helps data-driven models achieve better restoration of flare images and provides a better evaluation system based on real shots, promoting progress in real flare removal.

@misc{jin2023real, title = {Toward Real Flare Removal: A Comprehensive Pipeline and A New Benchmark}, author = {Jin, Zheyan and Chen, Shiqi and Feng, Huajun and Xu, Zhihai and Chen, Yueting}, month = jul, year = {2023}, eprint = {2306.15884}, archiveprefix = {arXiv}, primaryclass = {cs.CV}, } -

Imaging Simulation and Learning-Based Image Restoration for Remote Sensing Time Delay and Integration Cameras
Menghao Li, Ziran Zhang, Shiqi Chen, and 4 more authors
IEEE Transactions on Geoscience and Remote Sensing, Aug 2023

Time delay and integration (TDI) cameras are widely used in remote sensing because they capture high-resolution, high signal-to-noise ratio (SNR) images and can image in low-light environments. However, the quality of images captured by TDI cameras may be affected by many degradation factors, including jitter, charge transfer time mismatch, and drift angle. Moreover, compared with the single-line push-broom cameras and area gaze cameras used in remote sensing, the degradation of a TDI camera may accumulate during the charge accumulation process. In this article, we present a fast imaging simulation method for remote sensing TDI cameras, based on image resampling, that accurately simulates the image degradation caused by different factors. The simulated image pairs provide a sufficient dataset for modern supervised-learning image restoration methods. In addition, we present a novel network containing a row-attention block and a row-encoder block to resolve the row-variant blur in degraded images. We test our image restoration method on simulated degraded image datasets and real images; the results show that the proposed method can effectively restore degraded images. Our restoration method does not rely on auxiliary information detected by high-frequency sensors or multispectral bands, and it achieves better results than other blind restoration methods.

@article{10198314, author = {Li, Menghao and Zhang, Ziran and Chen, Shiqi and Xu, Zhihai and Li, Qi and Feng, Huajun and Chen, Yueting}, journal = {IEEE Transactions on Geoscience and Remote Sensing}, title = {Imaging Simulation and Learning-Based Image Restoration for Remote Sensing Time Delay and Integration Cameras}, month = aug, year = {2023}, volume = {61}, number = {}, pages = {1-17}, keywords = {Cameras;Image restoration;Imaging;Degradation;Remote sensing;Jitter;Charge transfer;Deep learning-based image restoration;degraded factors;imaging simulation;time delay and integration (TDI) camera}, doi = {10.1109/TGRS.2023.3300549}, } -

Computational Optics for Mobile Terminals in Mass Production
Shiqi Chen, Ting Lin, Huajun Feng, and 3 more authors
IEEE Transactions on Pattern Analysis and Machine Intelligence, Apr 2023

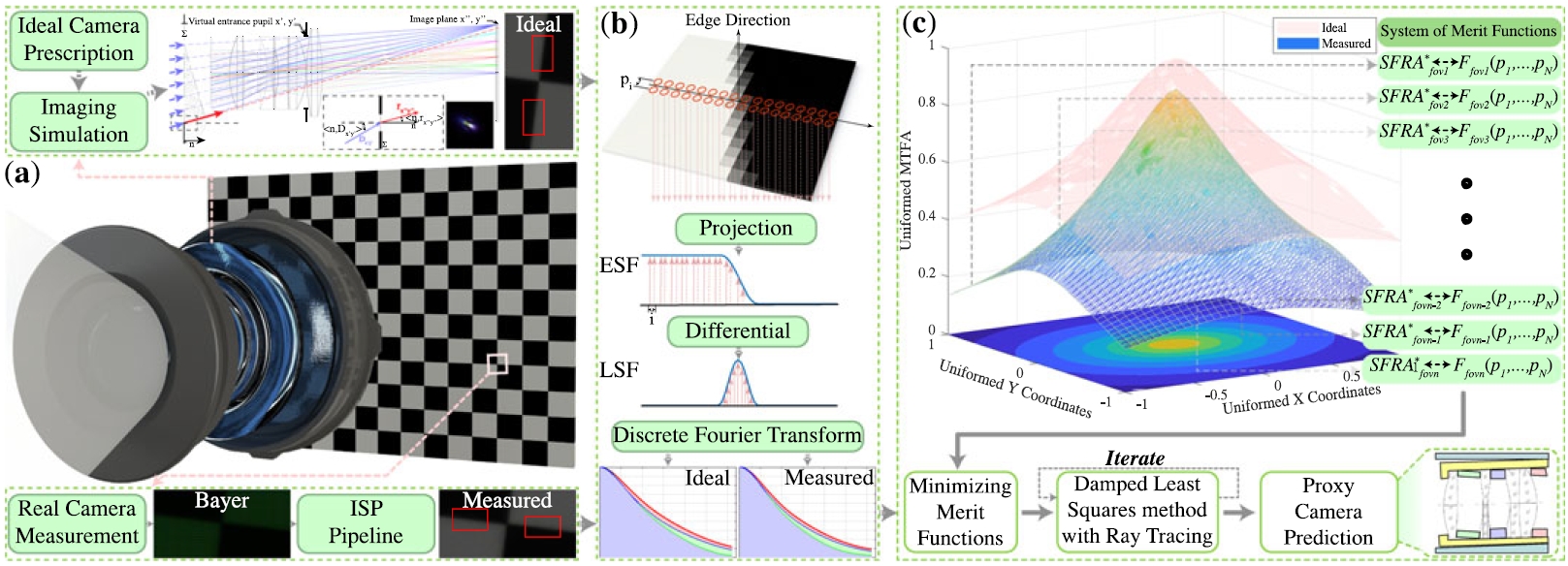

Correcting the optical aberrations and manufacturing deviations of cameras is a challenging task. Due to volume limitations and the demands of mass production, existing mobile terminals cannot rectify optical degradation. In this work, we systematically construct a perturbed lens system model that relates the deviated system parameters to the spatial frequency response (SFR) measured from photographs. Based on this model, we propose an optimization framework that builds proxy cameras from the SFRs of machined samples. Using the proxy cameras, we synthesize data pairs that encode optical aberrations and random manufacturing biases for training learning-based algorithms. Although convolutional neural networks have recently shown promising results in aberration correction, they are hard to generalize to stochastic machining biases. Therefore, we propose a dilated omni-dimensional dynamic convolution (DOConv) and implement it in post-processing to account for manufacturing degradation. Extensive experiments on multiple samples of two representative devices demonstrate that the proposed optimization framework accurately constructs the proxy camera. The dynamic processing model also adapts well to the manufacturing deviations of different cameras, realizing perfect computational photography. The evaluation shows that the proposed method bridges the gap between optical design, system machining, and the post-processing pipeline, shedding light on the integration of image signal reception (lens and sensor) and image signal processing (ISP).

@article{Chen_2022_TPAMI, author = {Chen, Shiqi and Lin, Ting and Feng, Huajun and Xu, Zhihai and Li, Qi and Chen, Yueting}, journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence}, title = {Computational Optics for Mobile Terminals in Mass Production}, month = apr, year = {2023}, volume = {45}, number = {4}, pages = {4245-4259}, } -

Hyperspectral image reconstruction based on the fusion of diffracted rotation blurred and clear images
Hao Xu, Haiquan Hu, Shiqi Chen, and 4 more authors
Optics and Lasers in Engineering, Jan 2023

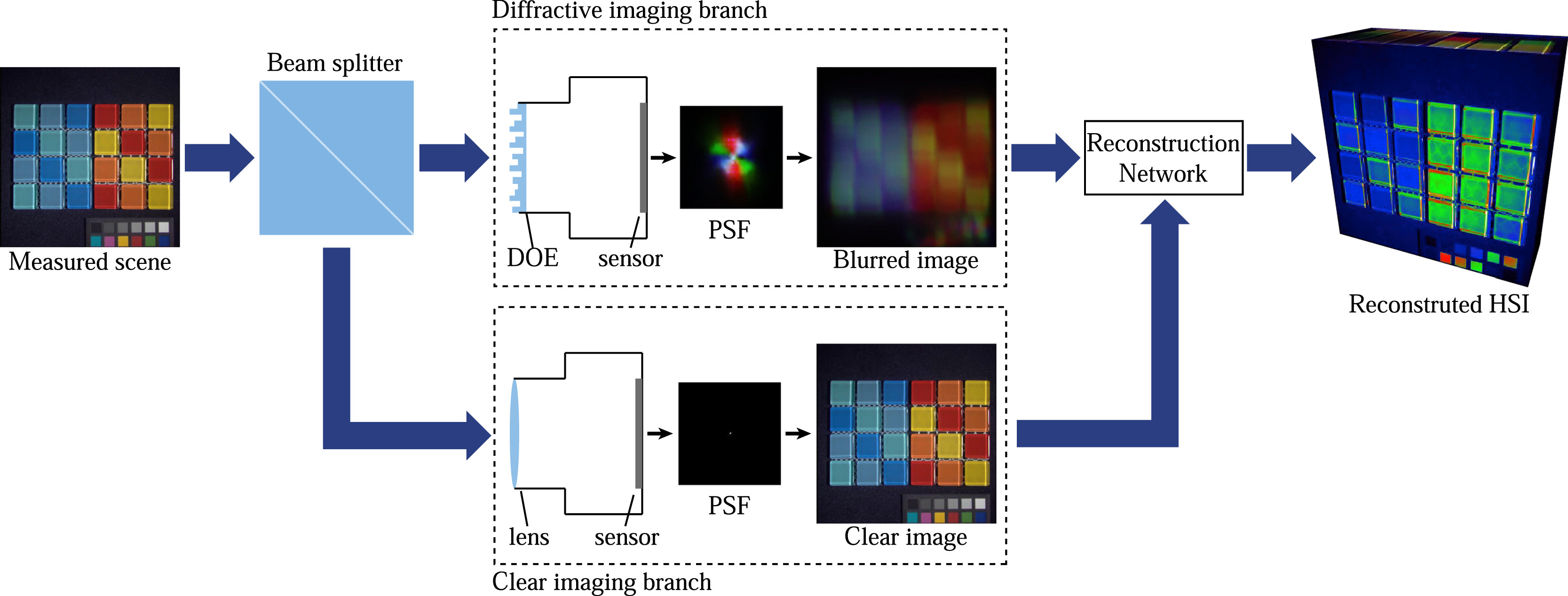

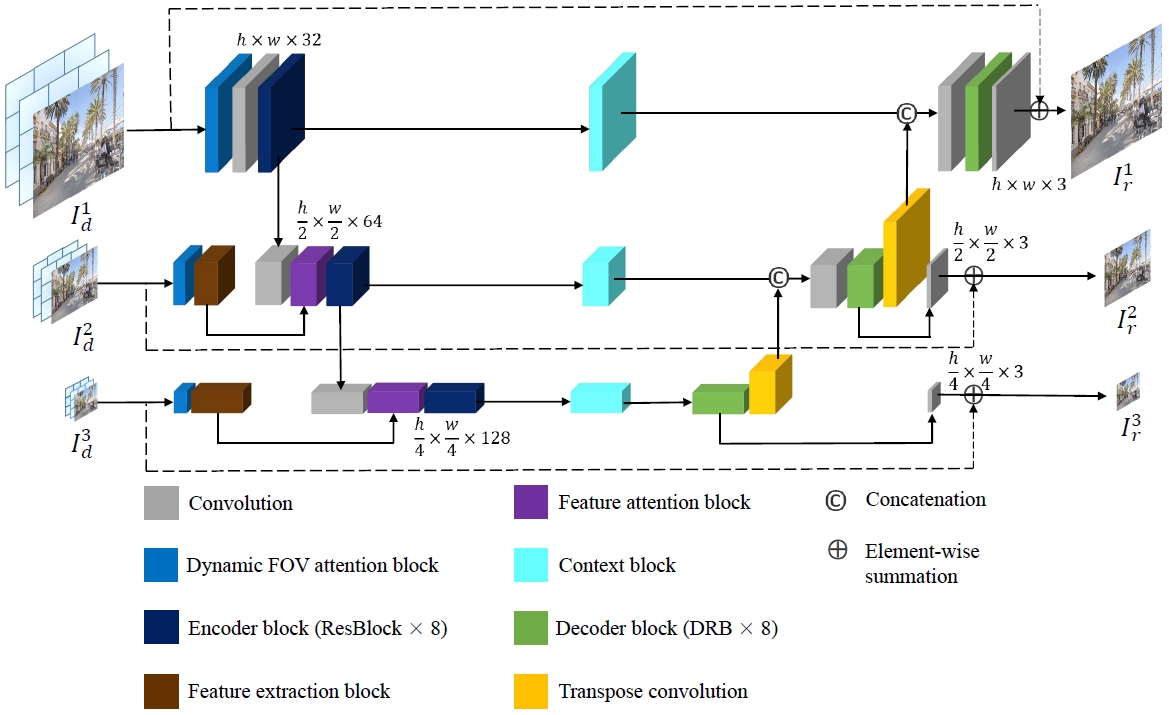

To overcome the slow imaging speed and bulky volume of traditional hyperspectral imaging systems, the recently proposed compact snapshot hyperspectral imaging system with diffracted rotation has attracted considerable interest. Because the diffracted rotation blurred image is severely degraded, the restored hyperspectral image (HSI) lacks spatial detail and spectral accuracy. To improve the quality of the reconstructed HSI, we present a joint imaging system that combines diffractive imaging and clear imaging, together with a convolutional neural network (CNN) based method with two input branches for HSI reconstruction. In the reconstruction network, we develop a feature extraction block (FEB) to extract features from each of the two input images. Subsequently, a double residual block (DRB) is designed to fuse and reconstruct the extracted features. Experimental results show that HSIs with high spatial resolution and spectral accuracy can be reconstructed. Our method outperforms state-of-the-art methods in terms of quantitative metrics and visual quality.

@article{XU_2023_OLEN, title = {Hyperspectral image reconstruction based on the fusion of diffracted rotation blurred and clear images}, journal = {Optics and Lasers in Engineering}, volume = {160}, pages = {107274}, month = jan, year = {2023}, issn = {0143-8166}, url = {https://www.sciencedirect.com/science/article/pii/S014381662200327X}, author = {Xu, Hao and Hu, Haiquan and Chen, Shiqi and Xu, Zhihai and Li, Qi and Jiang, Tingting and Chen, Yueting}, keywords = {Hyperspectral image reconstruction, Hyperspectral imaging, Diffraction, Image fusion, Convolutional neural network}, } -

Direct distortion prediction method for AR-HUD dynamic distortion correction
Fangzheng Yu, Nan Xu, Shiqi Chen, and 5 more authors
Appl. Opt., Jul 2023

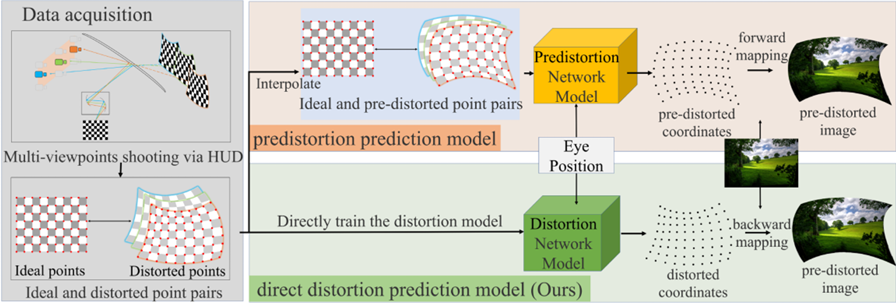

Dynamic distortion is one of the most critical factors affecting the experience of automotive augmented reality head-up displays (AR-HUDs). A wide range of views and the extensive display area result in extraordinarily complex distortions. Most existing neural-network-based methods first obtain distorted images and then derive predistorted data for training. This paper proposes a distortion prediction framework based on a neural network. It trains the network directly with the distorted data, realizing dynamic adaptation for AR-HUD distortion correction and avoiding errors in coordinate interpolation. Additionally, we predict distortion offsets instead of distortion coordinates and present a field of view (FOV)-weighted loss function, based on the spatially variant characteristics of the distortion, to further improve prediction accuracy. Experiments show that our methods improve the prediction accuracy of AR-HUD dynamic distortion without increasing network complexity or data processing overhead.

@article{Yu_2023_AO, author = {Yu, Fangzheng and Xu, Nan and Chen, Shiqi and Feng, Huajun and Xu, Zhihai and Li, Qi and Jiang, Tingting and Chen, Yueting}, journal = {Appl. Opt.}, keywords = {Augmented reality; Distortion; Image metrics; Image processing; Image resolution; Neural networks}, number = {21}, pages = {5720--5726}, publisher = {Optica Publishing Group}, title = {Direct distortion prediction method for AR-HUD dynamic distortion correction}, volume = {62}, month = jul, year = {2023}, } -

Snapshot hyperspectral imaging based on equalization designed DOE
Nan Xu, Hao Xu, Shiqi Chen, and 6 more authors
Opt. Express, Jun 2023

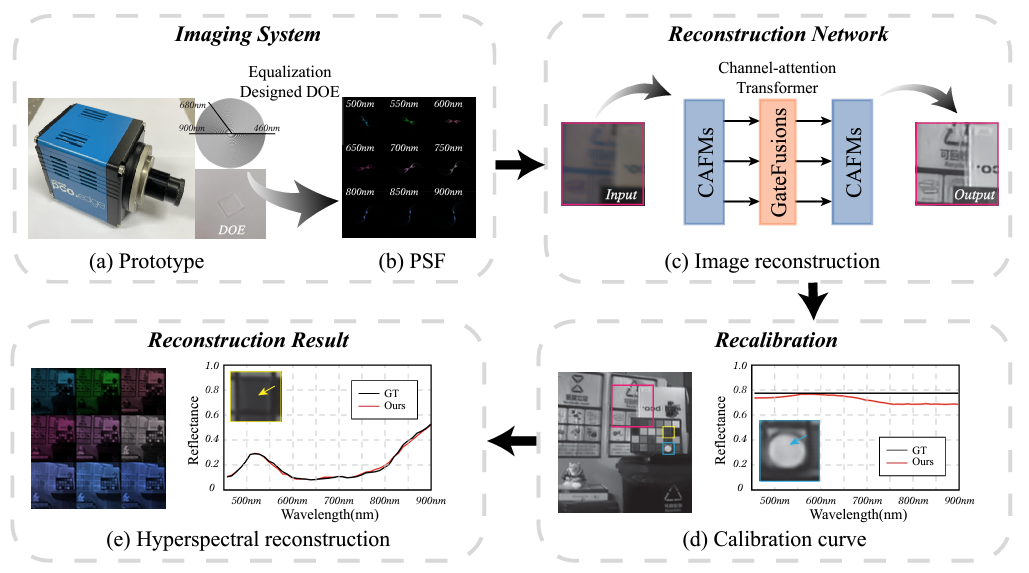

Hyperspectral imaging attempts to determine distinctive information in the spatial and spectral domains of a target. Over the past few years, hyperspectral imaging systems have become lighter and faster. In phase-coded hyperspectral imaging systems, a better coded-aperture design can improve spectral accuracy. Using wave optics, we propose an equalization-designed phase-coded aperture that achieves the desired equalized point spread functions (PSFs), which provide richer features for subsequent image reconstruction. For image reconstruction, our proposed hyperspectral reconstruction network, CAFormer, achieves better results than state-of-the-art networks with less computation by replacing self-attention with channel attention. Our work revolves around the equalization design of the phase-coded aperture and optimizes the imaging process from three aspects: hardware design, reconstruction algorithm, and PSF calibration. Our work brings compact snapshot hyperspectral technology closer to practical application.

@article{Xu_2023_OE, author = {Xu, Nan and Xu, Hao and Chen, Shiqi and Hu, Haiquan and Xu, Zhihai and Feng, Huajun and Li, Qi and Jiang, Tingting and Chen, Yueting}, journal = {Opt. Express}, keywords = {Diffractive optical elements; Hyperspectral imaging; Image processing; Image quality; Imaging systems; Wave optics}, number = {12}, pages = {20489--20504}, publisher = {Optica Publishing Group}, title = {Snapshot hyperspectral imaging based on equalization designed DOE}, volume = {31}, month = jun, year = {2023}, } -

Mobile image restoration via prior quantization
Shiqi Chen, Jingwen Zhou, Menghao Li, and 2 more authors
Pattern Recognition Letters, Sep 2023

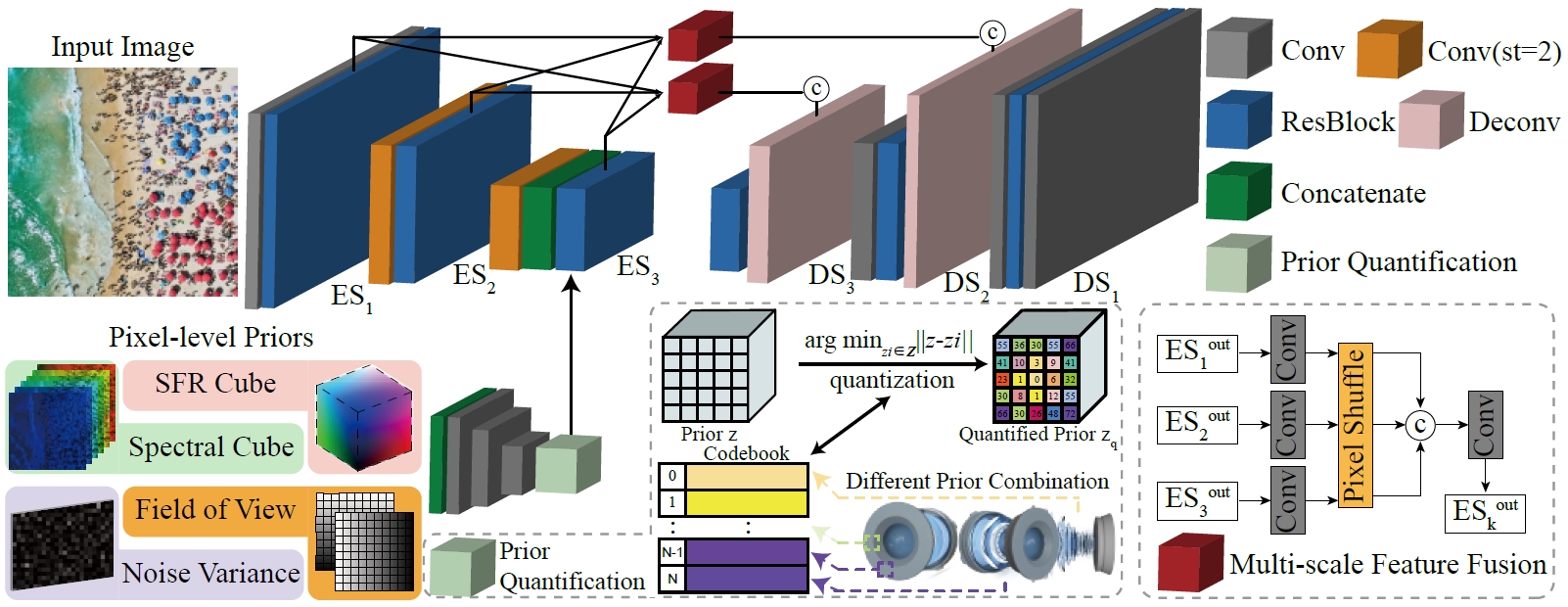

In mobile-terminal photography, image degradation is a multivariate problem to which the scene spectrum, lens imperfections, sensor noise, and field of view all contribute. Beyond eliminating degradation at the hardware level, a post-processing system that exploits various priors is important for correction. However, because the priors differ in content, pipelines that directly align these factors show limited efficiency and suboptimal restoration. Here, we propose a prior quantization model to correct the degradation introduced in the image formation pipeline. To integrate the multivariate information, we encode various priors into a latent space and quantize them with learnable codebooks. After quantization, the prior codes are fused with the image restoration branch to realize targeted optical degradation correction. Moreover, we propose a comprehensive synthesis flow to acquire data pairs at relatively low computational overhead. Comprehensive experiments demonstrate the flexibility of the proposed method and validate its potential to accomplish targeted restoration for mass-produced mobile terminals. Furthermore, our model can be used to analyze the influence of various priors and of device degradation, which is helpful for joint software-hardware design.

@article{CHEN202364, title = {Mobile image restoration via prior quantization}, journal = {Pattern Recognition Letters}, volume = {174}, pages = {64-70}, month = sep, year = {2023}, issn = {0167-8655}, doi = {10.1016/j.patrec.2023.08.017}, url = {https://www.sciencedirect.com/science/article/pii/S0167865523002374}, author = {Chen, Shiqi and Zhou, Jingwen and Li, Menghao and Chen, Yueting and Jiang, Tingting}, keywords = {Image restoration, Mobile ISP systems, Computational photography, Deep learning}, } -

DR-UNet: dynamic residual U-Net for blind correction of optical degradation
Jinwen Zhou, Shiqi Chen, Qi Li, and 2 more authors
In Conference on Infrared, Millimeter, Terahertz Waves and Applications (IMT2022), May 2023

Due to the size limitations of mobile devices, their optical designs can hardly reach the level of professional equipment. Restoration methods are therefore needed to compensate for this shortfall. However, most models are still static, which limits their ability to represent images. To tackle this problem, we propose a plug-and-play deformable residual block for efficiently sampling spatially related features at different scales. Moreover, since optical degradation is closely correlated with the field of view (FOV), we introduce an FOV attention block based on omni-dimensional dynamic convolution to integrate spatial features. On this basis, we further propose a novel optical degradation correction model called DR-UNet. It is built on an encoder-decoder structure, along with several context blocks, to capture multiscale information. By correcting the optical degradation in images from coarse to fine, we obtain high-quality, degradation-free images. Extensive results demonstrate that our method compares favorably with several state-of-the-art methods.

@inproceedings{zhou_2023_IMT, title = {DR-UNet: dynamic residual U-Net for blind correction of optical degradation}, author = {Zhou, Jinwen and Chen, Shiqi and Li, Qi and Li, Tongyue and Feng, Huajun}, booktitle = {Conference on Infrared, Millimeter, Terahertz Waves and Applications (IMT2022)}, volume = {12565}, pages = {326--338}, month = may, year = {2023}, organization = {SPIE}, }

2022

-

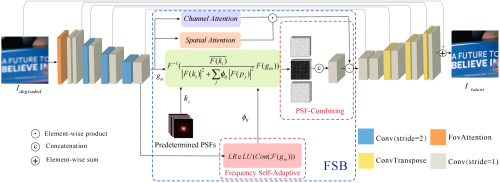

Non-blind optical degradation correction via frequency self-adaptive and finetune tactics
Ting Lin, Shiqi Chen*, Huajun Feng, and 3 more authors
Opt. Express, Jun 2022

In mobile photography applications, limited volume constrains the diversity of optical design. In addition to the narrow space, the deviations introduced in mass production cause random biases in real cameras. Consequently, these factors introduce spatially varying aberrations and stochastic degradation into the physical formation of an image. Many existing methods obtain excellent performance on one specific device but cannot quickly adapt to mass production. To address this issue, we propose a frequency self-adaptive model to restore realistic features of the latent image. The restoration is performed mainly in the Fourier domain, and two attention mechanisms are introduced to match features between the Fourier and spatial domains. Our method uses a lightweight network that requires no modification when the field of view (FoV) changes. To account for the manufacturing deviations of a specific camera, we first pre-train a simulation-based model and then fine-tune it with additional manufacturing errors, which greatly reduces the time and computational overhead of implementation. Extensive results demonstrate the promise of integrating our technique with existing post-processing systems.

@article{Lin_2022_OE, author = {Lin, Ting and Chen*, Shiqi and Feng, Huajun and Xu, Zhihai and Li, Qi and Chen, Yueting}, journal = {Opt. Express}, keywords = {All optical devices; Blind deconvolution; Image processing; Image quality; Optical design; Ray tracing}, number = {13}, pages = {23485--23498}, publisher = {Optica Publishing Group}, title = {Non-blind optical degradation correction via frequency self-adaptive and finetune tactics}, volume = {30}, month = jun, year = {2022}, } -

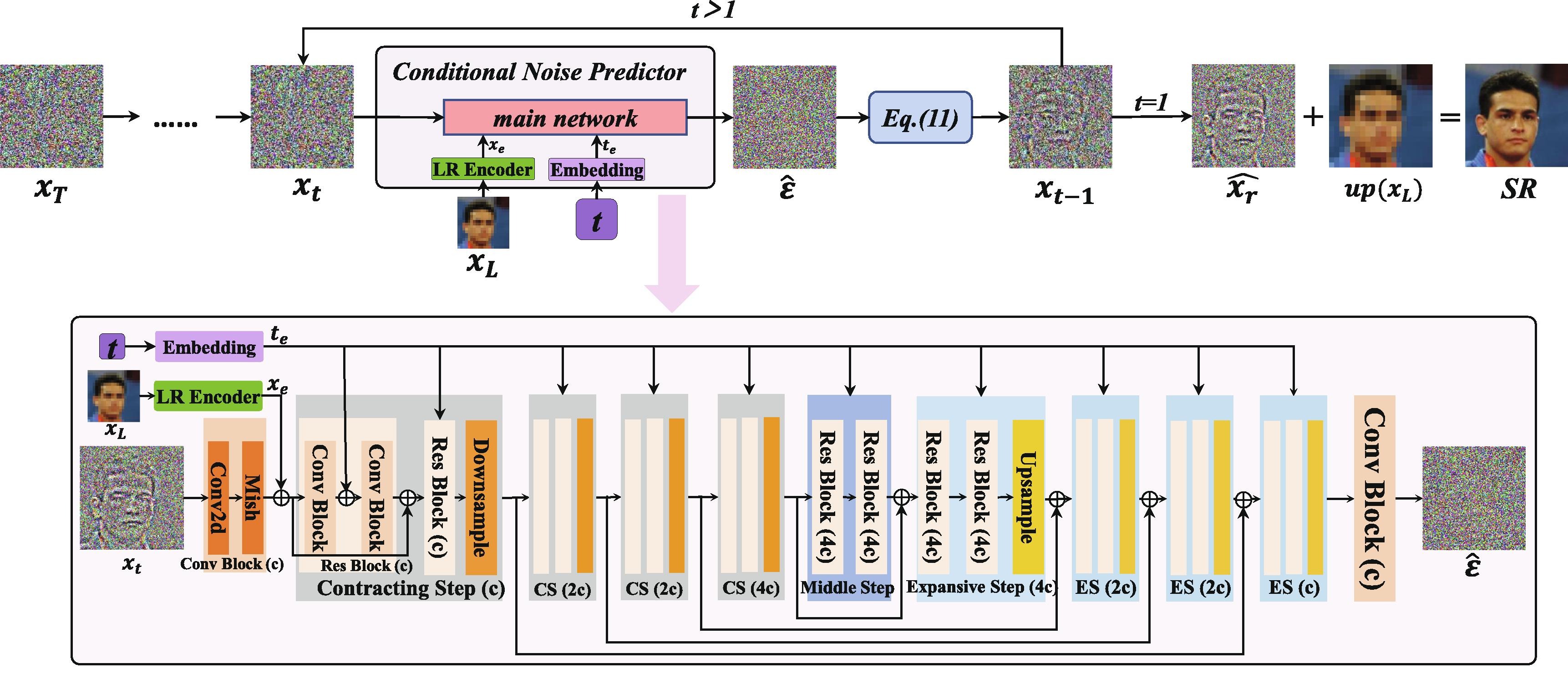

SRDiff: Single image super-resolution with diffusion probabilistic models
Haoying Li, Yifan Yang, Shiqi Chen, and 5 more authors
Neurocomputing, Feb 2022

Single image super-resolution (SISR) aims to reconstruct high-resolution (HR) images from given low-resolution (LR) images. It is an ill-posed problem because one LR image corresponds to multiple HR images. Recently, learning-based SISR methods have greatly outperformed traditional methods. However, PSNR-oriented, GAN-driven, and flow-based methods suffer from over-smoothing, mode collapse, and large model footprints, respectively. To solve these problems, we propose a novel SISR diffusion probabilistic model (SRDiff), which is the first diffusion-based model for SISR. SRDiff is optimized with a variant of the variational bound on the data likelihood. Through a Markov chain, it can provide diverse and realistic super-resolution (SR) predictions by gradually transforming Gaussian noise into a super-resolution image conditioned on an LR input. In addition, we introduce residual prediction to the whole framework to speed up model convergence. Our extensive experiments on facial and general benchmarks (CelebA and DIV2K datasets) show that (1) SRDiff can generate diverse SR results with rich details and achieve competitive performance against other state-of-the-art methods when given only one LR input; (2) SRDiff is easy to train and has a small footprint, where "footprint" denotes model size (the number of model parameters); and (3) SRDiff can perform flexible image manipulation operations, including latent space interpolation and content fusion.

@article{Li_2022_NC, title = {SRDiff: Single image super-resolution with diffusion probabilistic models}, journal = {Neurocomputing}, volume = {479}, pages = {47-59}, month = feb, year = {2022}, issn = {0925-2312}, url = {https://www.sciencedirect.com/science/article/pii/S0925231222000522}, author = {Li, Haoying and Yang, Yifan and Chen, Shiqi and Chang, Meng and Feng, Huajun and Xu, Zhihai and Li, Qi and Chen, Yueting}, keywords = {Single image super-resolution, Diffusion probabilistic model, Diverse results, Deep learning}, } -

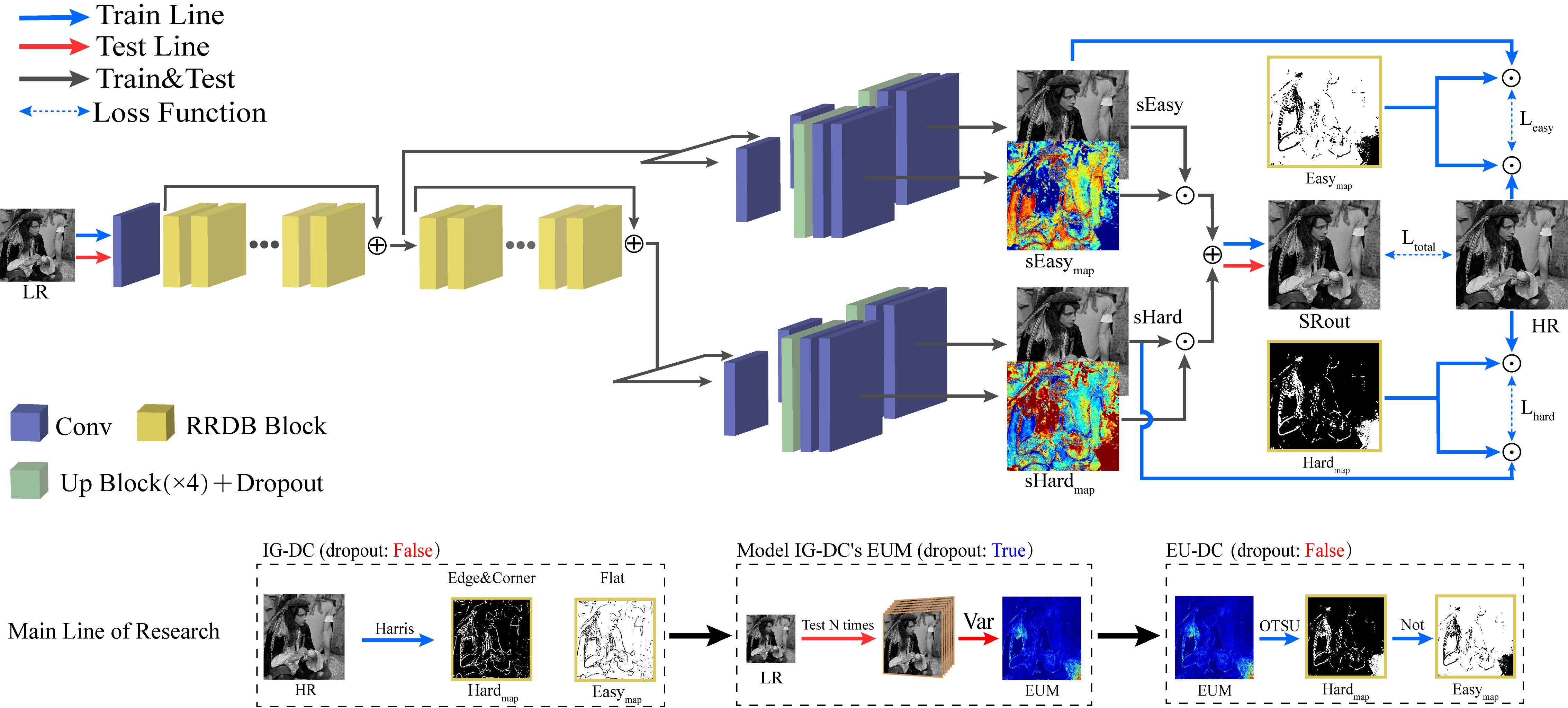

Epistemic-Uncertainty-Based Divide-and-Conquer Network for Single-Image Super-Resolution
Jiaqi Yang, Shiqi Chen, Qi Li, and 3 more authors
Electronics, Nov 2022

The introduction of convolutional neural networks (CNNs) into single-image super-resolution (SISR) has resulted in remarkable performance over the last decade. In SISR, there is a contradiction between processing all regions indiscriminately and the differing processing difficulty of different regions, which calls for locally differentiated processing in SR networks. In this paper, we propose an epistemic-uncertainty-based divide-and-conquer network (EU-DC) to address this problem. First, we build an image-gradient-based divide-and-conquer network (IG-DC) that uses gradient-based division to separate degraded images into easy and hard processing regions. Second, we model the IG-DC’s epistemic uncertainty map (EUM) using Monte Carlo dropout, thereby measuring the output confidence of the IG-DC. The lower the output confidence, the more difficult a region is for the IG-DC to process. The EUM-based division is generated by quantizing the EUM into two levels. Finally, the IG-DC is transformed into an EU-DC by replacing the gradient-based division with the EUM-based division. Our extensive experiments demonstrate that the proposed EU-DC achieves better reconstruction performance than multiple state-of-the-art SISR methods in terms of both quantitative metrics and visual quality.

2021

-

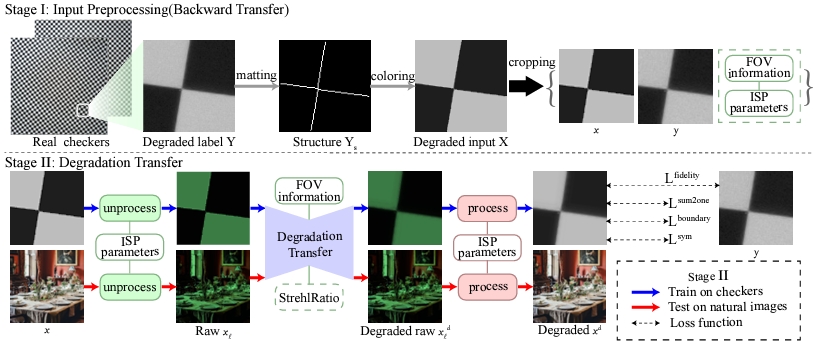

Extreme-Quality Computational Imaging via Degradation Framework
Shiqi Chen, Huajun Feng, Keming Gao, and 2 more authors
In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Oct 2021

To meet the space limitations of optical elements, mobile cameras adopt free-form surfaces or high-order aspherical lenses to compress volume. However, free-form surfaces also introduce abrupt variations in image quality. Existing model-based deconvolution methods are inefficient at handling degradation that varies widely across regions. Meanwhile, deep learning techniques in low-level and physics-based vision suffer from a lack of accurate data. To address this issue, we develop a degradation framework to estimate the spatially variant point spread functions (PSFs) of mobile cameras. Given extreme-quality digital images as input, the proposed framework generates degraded images that share a common domain with real-world photographs. Using the synthetic image pairs, we design a Field-Of-View shared kernel prediction network (FOV-KPN) to perform spatially adaptive reconstruction on real degraded photos. Extensive experiments demonstrate that the proposed approach achieves extreme-quality computational imaging and outperforms state-of-the-art methods. Furthermore, we illustrate that our technique can be integrated into existing postprocessing systems, resulting in significantly improved visual quality.

@inproceedings{Chen_2021_ICCV, author = {Chen, Shiqi and Feng, Huajun and Gao, Keming and Xu, Zhihai and Chen, Yueting}, title = {Extreme-Quality Computational Imaging via Degradation Framework}, booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)}, month = oct, year = {2021}, pages = {2632-2641}, } -

Optical Aberrations Correction in Postprocessing Using Imaging Simulation
Shiqi Chen, Huajun Feng, Dexin Pan, and 3 more authors
ACM Trans. Graph. (Post.Rec in SIGGRAPH 2022), Sep 2021

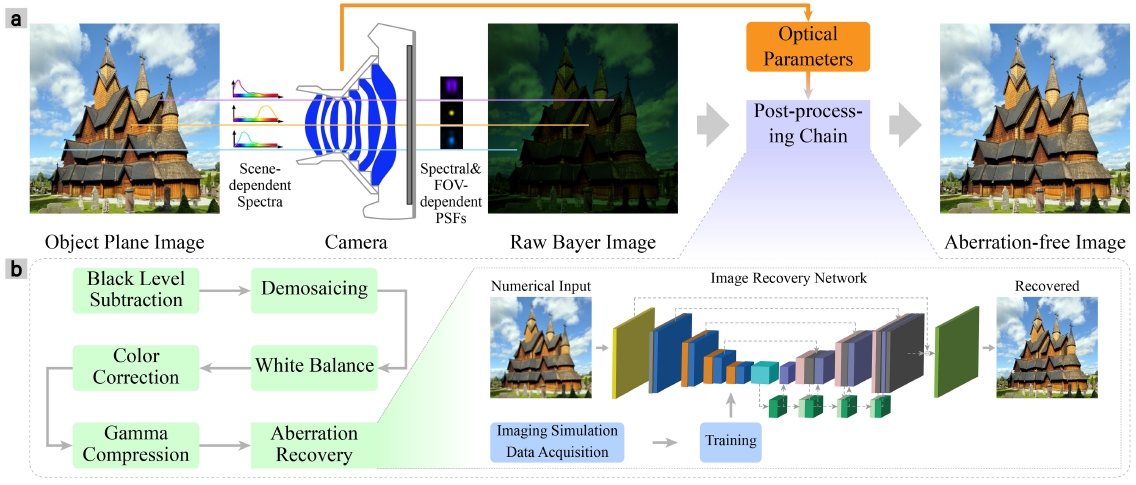

As the popularity of mobile photography continues to grow, considerable effort is being invested in the reconstruction of degraded images. Due to the spatial variation in optical aberrations, which cannot be avoided during the lens design process, recent commercial cameras have shifted some of these correction tasks from optical design to postprocessing systems. However, without taking the optical parameters into account, these systems achieve only limited aberration correction. In this work, we propose a practical method for recovering the degradation caused by optical aberrations. Specifically, we establish an imaging simulation system based on our proposed optical point spread function model. Given the optical parameters of a camera, it generates the imaging results of that specific device. To perform the restoration, we design a spatially adaptive network and train it on synthetic data pairs generated by the imaging simulation system, eliminating the overhead of capturing training data through extensive shooting and registration. Moreover, we comprehensively evaluate the proposed method both in simulation and experimentally, with a customized digital single-lens reflex camera lens and a HUAWEI HONOR 20, respectively. The experiments demonstrate that our solution successfully removes spatially variant blur and color dispersion. Compared with state-of-the-art deblurring methods, the proposed approach achieves better results with lower computational overhead. In addition, the reconstruction technique introduces no artificial textures and transfers easily to current commercial cameras.

@article{Chen_2021_TOG, author = {Chen, Shiqi and Feng, Huajun and Pan, Dexin and Xu, Zhihai and Li, Qi and Chen, Yueting}, title = {Optical Aberrations Correction in Postprocessing Using Imaging Simulation}, year = {2021}, issue_date = {October 2021}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, volume = {40}, number = {5}, issn = {0730-0301}, url = {https://doi.org/10.1145/3474088}, journal = {ACM Trans. Graph. (Post.Rec in SIGGRAPH 2022)}, month = sep, articleno = {192}, numpages = {15}, keywords = {imaging simulation, deep-learning networks, image reconstruction, Optical aberrations}, }