Degradation Transfer

Can the degradation in one image be transferred to another picture?

Abstract:

To meet the space constraints of optical elements, mobile cameras adopt free-form surfaces or high-order aspherical lenses to compress volume. However, free-form surfaces also introduce the problem of image quality mutation. Existing model-based deconvolution methods are inefficient at handling degradation that varies widely across spatial regions, and deep learning techniques in low-level and physics-based vision suffer from a lack of accurate data. To address this issue, we develop a degradation framework to estimate the spatially variant point spread functions (PSFs) of mobile cameras. Given extreme-quality digital images as input, the proposed framework generates degraded images that share a common domain with real-world photographs. Supplied with the synthetic image pairs, we design a Field-Of-View shared kernel prediction network (FOV-KPN) to perform spatially adaptive reconstruction on real degraded photos. Extensive experiments demonstrate that the proposed approach achieves extreme-quality computational imaging and outperforms state-of-the-art methods. Furthermore, we show that our technique can be integrated into existing postprocessing systems, significantly improving visual quality.

Background:

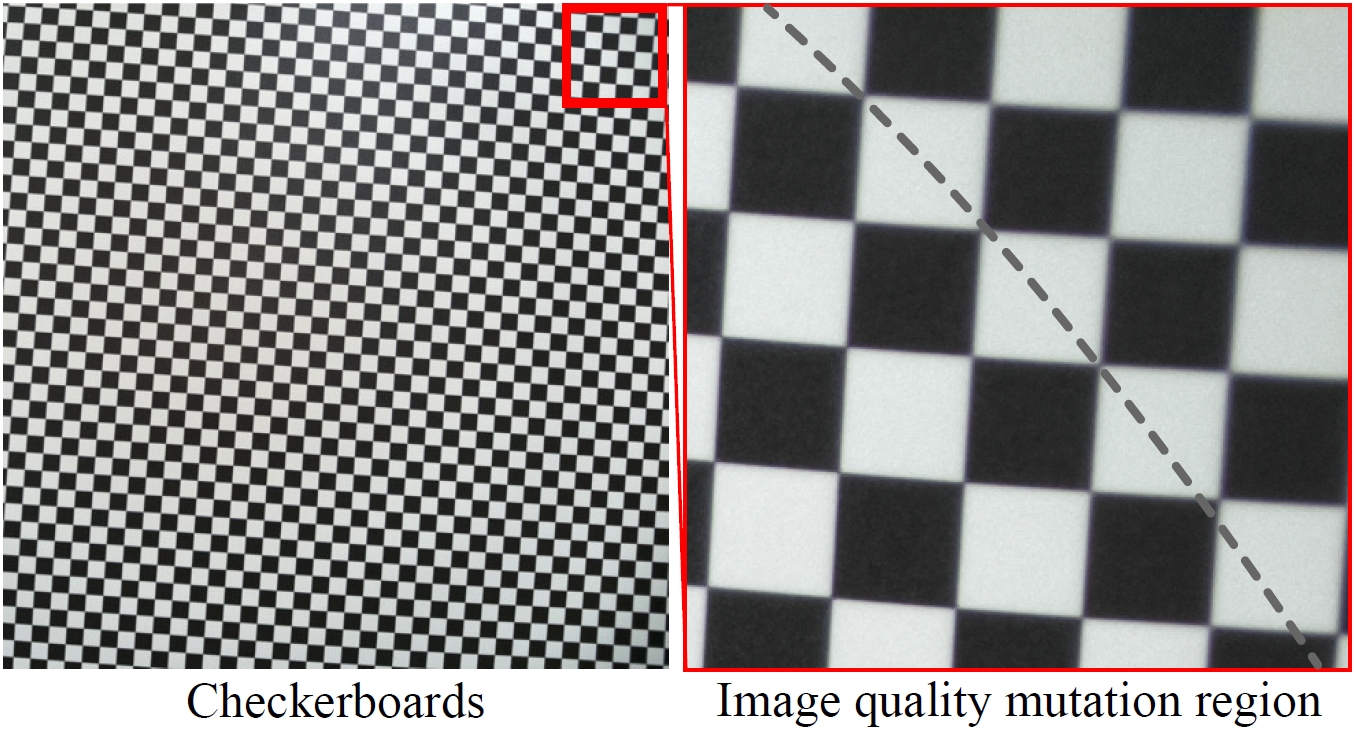

Image quality changes sharply at the edge of the FoV, generally as a result of manufacturing errors. Directly measuring these deviations is very difficult once the lens is mounted, so we instead transfer the degradation of one image to another, uncorrupted photo. The figure above illustrates this image quality mutation at the edge of a mobile phone camera.

Method:

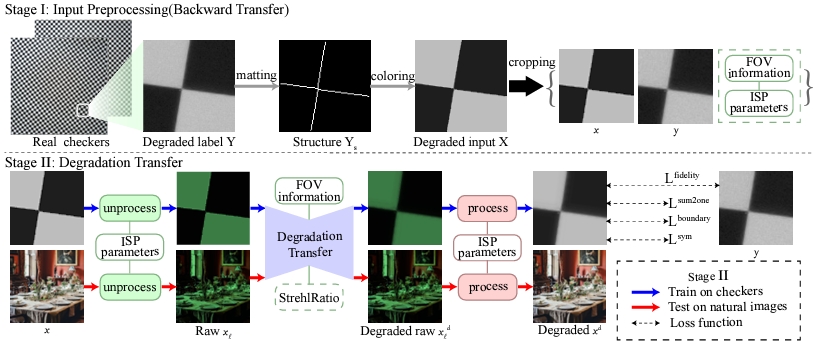

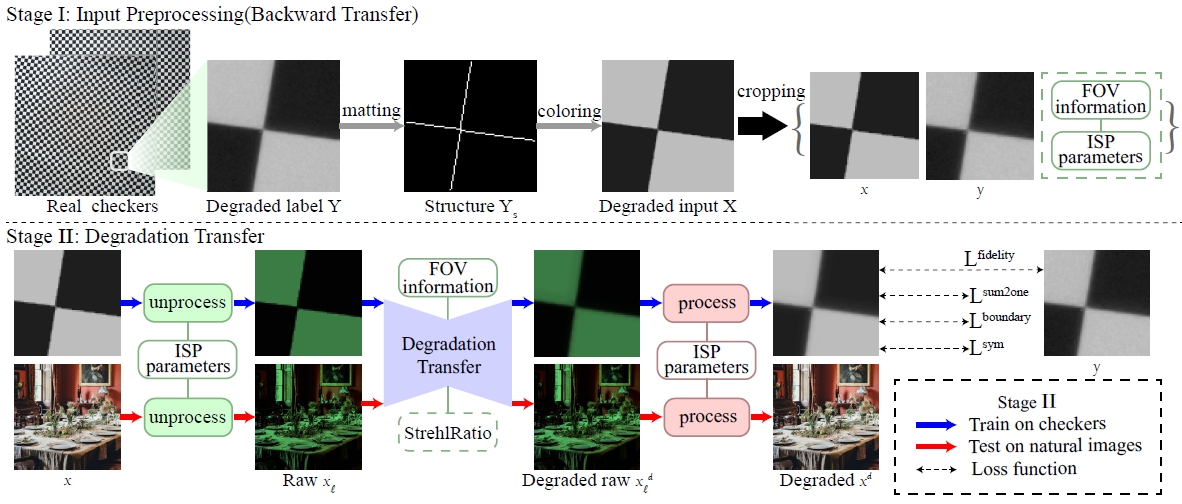

Our aim is to transfer the degradation of one image to another. To achieve this, we:

- built the first deep-learning-based calibration method that densely represents the optical degradation of a deviated camera.

The degradation transfer model used for this representation is trained under several constraints:

\[\mathcal{L} = \alpha \mathcal{L}_{fidelity} + \beta \mathcal{L}_{sum2one} + \gamma \mathcal{L}_{boundary} + \delta \mathcal{L}_{sym}\]

where \(\alpha\), \(\beta\), \(\gamma\), and \(\delta\) are the weight coefficients of the individual loss functions.

\[\mathcal{L}_{fidelity}=\|x^{d}-y\|_{1}\]

\[\mathcal{L}_{sum2one}=\left|1-\sum_{i,j} k_{i,j}\right|\]

\[\mathcal{L}_{boundary}=\sum_{i,j} \left|k_{i,j} \cdot m_{i,j}\right|\]

\[\mathcal{L}_{sym} = \sum_{i,j}\left(k(i,j)-\frac{1}{2}\left(k(i,j)+k_{sym}(-i, -j)\right)\right)^{2}\]

The symmetry term forces each PSF to be similar to its centrally symmetric counterpart. The unprocessing and processing pipeline is the same as in Imaging Simulation.
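The four loss terms can be sketched in NumPy for a single PSF. This is a minimal illustration under assumptions the text does not state. `k_sym(-i, -j)` is taken to be the PSF rotated by 180 degrees about its center. `m` is a hypothetical binary mask that equals 1 outside a centered disk. `x_d` and `y` are assumed to be the degraded output and the target photo. The default weights are placeholders, not training values.

```python
import numpy as np


def boundary_mask(size: int, radius: float) -> np.ndarray:
    """Hypothetical mask m: 1 outside a centered disk of `radius`, 0 inside."""
    c = (size - 1) / 2
    ii, jj = np.mgrid[0:size, 0:size]
    return (((ii - c) ** 2 + (jj - c) ** 2) > radius ** 2).astype(float)


def transfer_loss(x_d, y, k, m, weights=(1.0, 1.0, 1.0, 1.0)):
    """Weighted sum of the fidelity, sum-to-one, boundary and symmetry terms.

    x_d: degraded output, y: target photo, k: one odd-sized PSF, m: mask.
    """
    alpha, beta, gamma, delta = weights
    # Assumption: k_sym(-i, -j) is k rotated by 180 degrees about its center.
    k_sym = k[::-1, ::-1]
    terms = {
        "fidelity": float(np.abs(x_d - y).sum()),  # L1 norm of the residual
        "sum2one": float(abs(1.0 - k.sum())),       # PSF energy should be 1
        "boundary": float(np.abs(k * m).sum()),     # penalize energy where m = 1
        "sym": float(((k - 0.5 * (k + k_sym)) ** 2).sum()),
    }
    total = (alpha * terms["fidelity"] + beta * terms["sum2one"]
             + gamma * terms["boundary"] + delta * terms["sym"])
    return total, terms


# Usage: a centered delta PSF and identical images give zero loss.
k = np.zeros((5, 5))
k[2, 2] = 1.0
m = boundary_mask(5, radius=1.0)
img = np.random.default_rng(0).random((8, 8))
total, terms = transfer_loss(img, img, k, m)
print(total, terms)
```

In a real training loop these terms would be computed on framework tensors so that gradients flow to the PSF estimator. NumPy is used here only to make the arithmetic of each term explicit.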

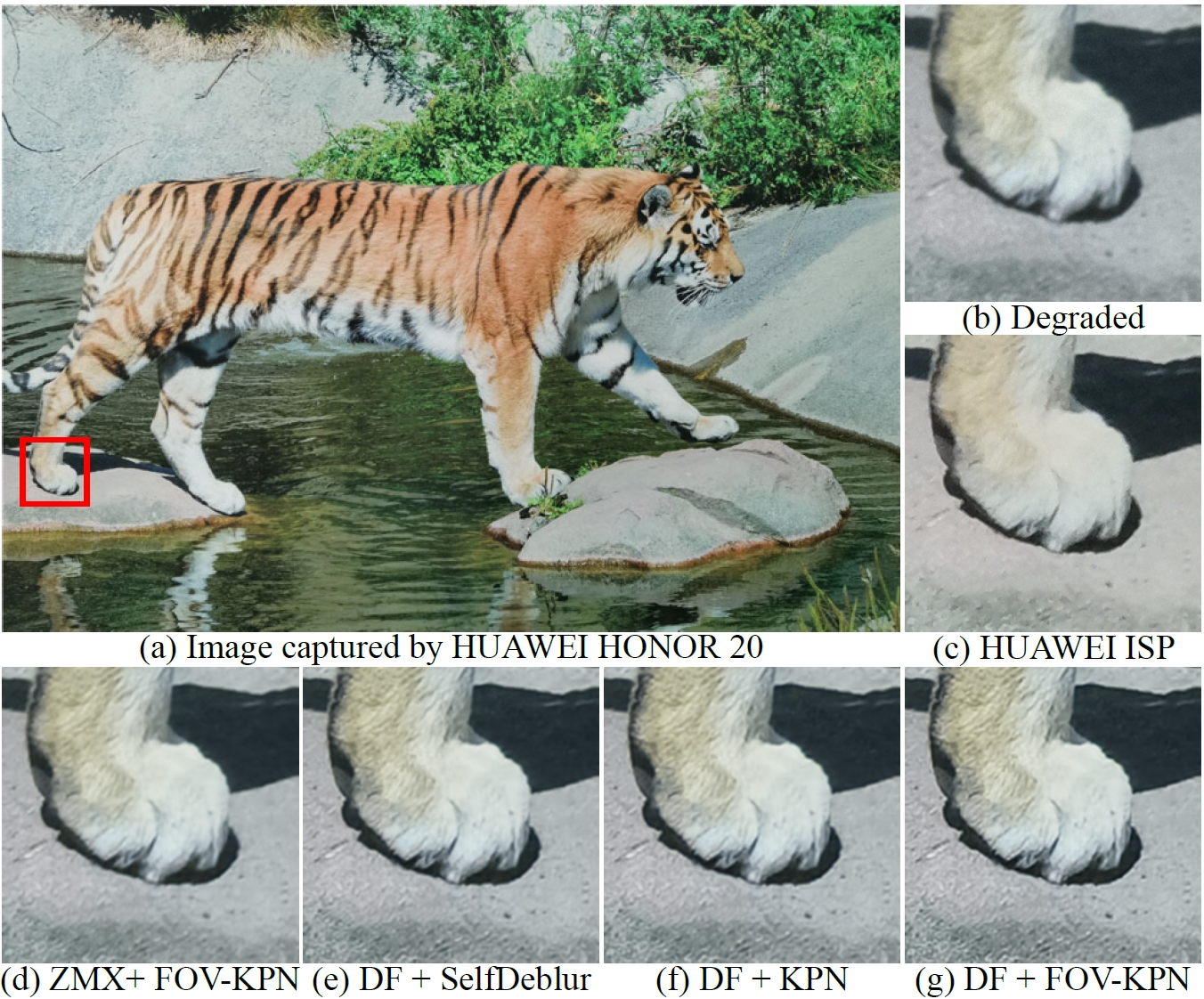

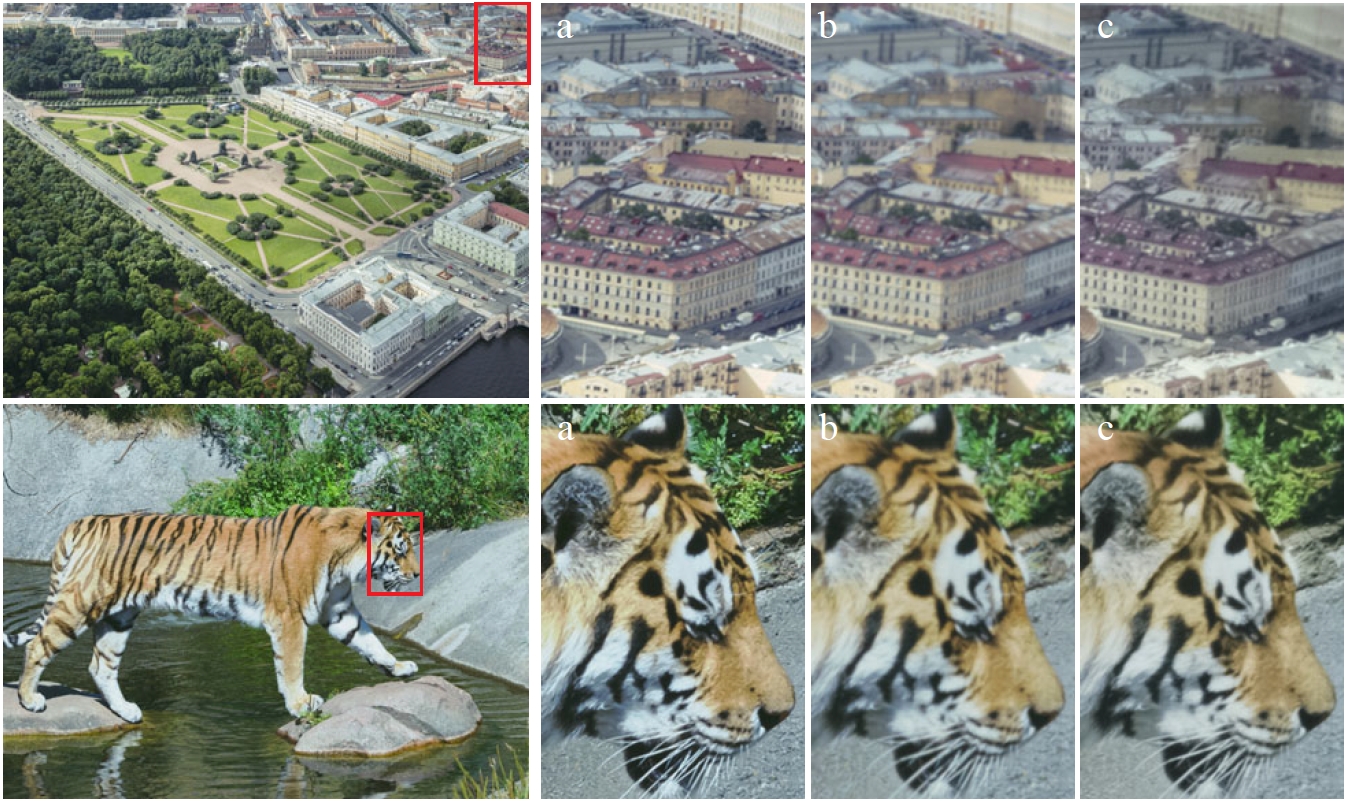

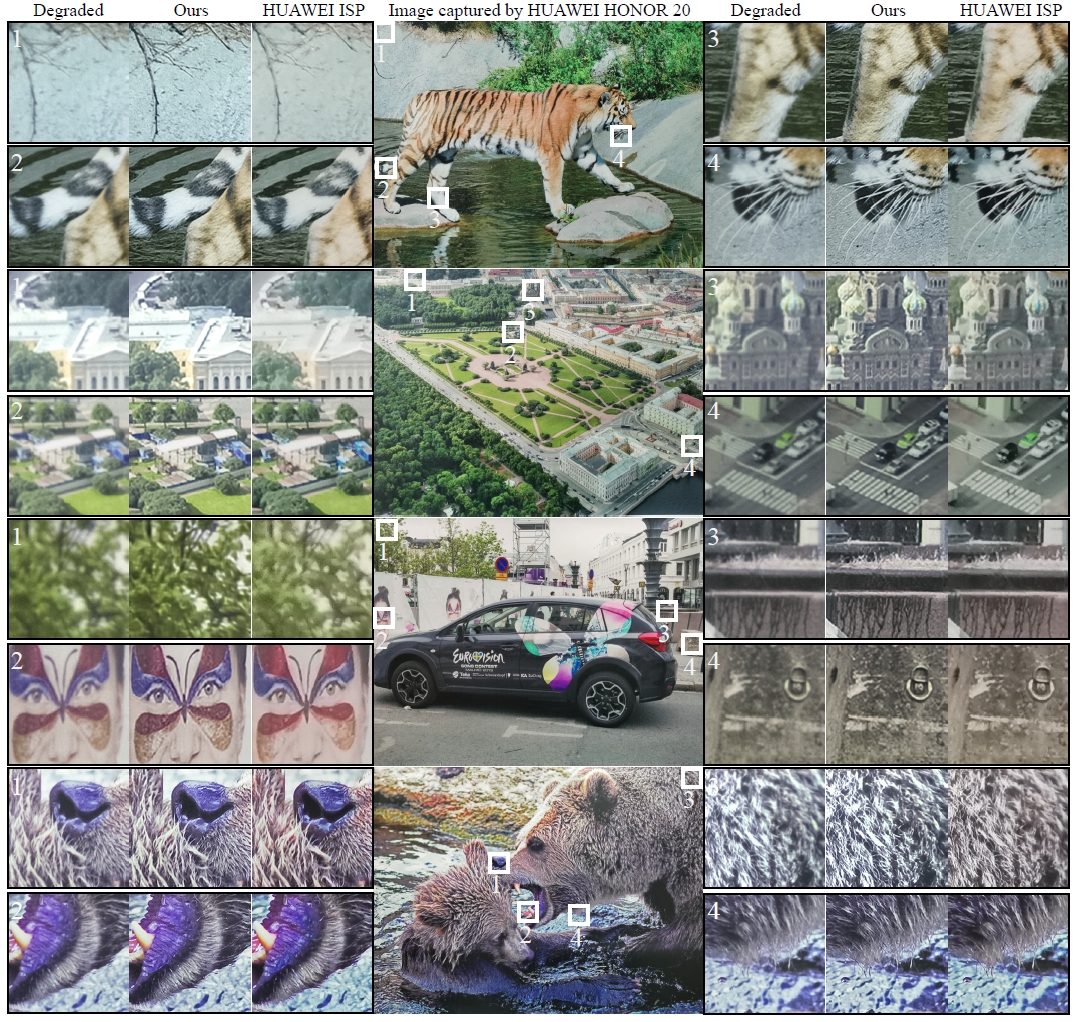

After training the degradation transfer model, we can use it to reproduce the camera's degradation and apply it to clean images. Evaluations on natural photographs are shown below.

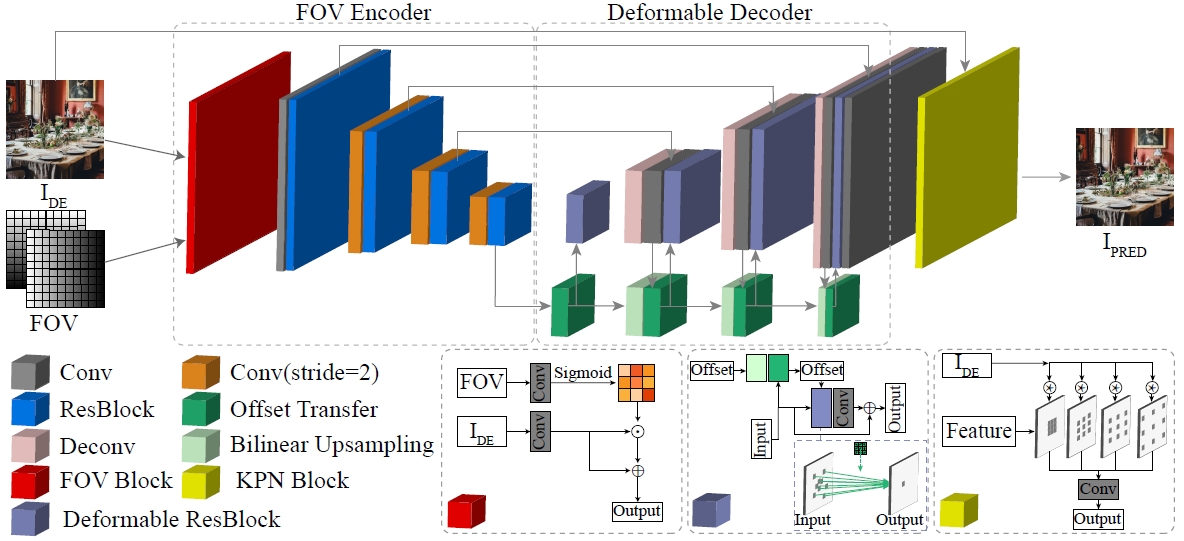
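Applying a learned degradation to a clean image amounts to spatially variant blurring. The sketch below is a simplified stand-in, not the framework's implementation. It assumes a piecewise-constant model in which the field of view is split into a grid of tiles, each with its own PSF. The grid layout, the reflect padding and the function names are illustrative assumptions. The random PSFs stand in for those the trained model would estimate, and only a single grayscale channel is handled.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def convolve2d_same(img: np.ndarray, k: np.ndarray) -> np.ndarray:
    """'Same'-size 2D convolution of a grayscale image with reflect padding."""
    kh, kw = k.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="reflect")
    windows = sliding_window_view(padded, (kh, kw))  # shape (H, W, kh, kw)
    # Convolution is correlation with the kernel flipped in both axes.
    return np.einsum("ijkl,kl->ij", windows, k[::-1, ::-1])


def degrade_tiled(clean: np.ndarray, psfs: np.ndarray) -> np.ndarray:
    """Apply one PSF per tile; psfs has shape (rows, cols, kh, kw)."""
    rows, cols = psfs.shape[:2]
    h, w = clean.shape
    hs = np.linspace(0, h, rows + 1).astype(int)  # tile borders along y
    ws = np.linspace(0, w, cols + 1).astype(int)  # tile borders along x
    out = np.empty((h, w))
    for r in range(rows):
        for c in range(cols):
            # Blur the whole image so tile edges use real neighbours,
            # then keep only the pixels that belong to this tile.
            blurred = convolve2d_same(clean, psfs[r, c])
            out[hs[r]:hs[r + 1], ws[c]:ws[c + 1]] = blurred[hs[r]:hs[r + 1], ws[c]:ws[c + 1]]
    return out


# Usage: a 4 x 4 grid of random, normalized 7 x 7 PSFs.
rng = np.random.default_rng(0)
clean = rng.random((64, 64))
psfs = rng.random((4, 4, 7, 7))
psfs /= psfs.sum(axis=(2, 3), keepdims=True)  # each PSF sums to one
degraded = degrade_tiled(clean, psfs)
```

A denser PSF grid, or interpolating between neighbouring PSFs, would reduce visible seams at tile borders at the cost of more convolutions.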

We construct a large dataset for each camera and propose two deep learning models for correction: one blind and one non-blind.
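The abstract describes FOV-KPN as a kernel prediction network for spatially adaptive reconstruction. In KPN-style models generally, the network predicts a small filter for every pixel, and the output pixel is that filter applied to the pixel's neighbourhood. The network architecture and its FOV sharing scheme are not given here, so the sketch below covers only this generic filtering step. The random kernels are hypothetical stand-ins for network predictions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def apply_per_pixel_kernels(img: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Filter a grayscale image with one K x K kernel per pixel.

    img: (H, W); kernels: (H, W, K, K) with K odd, e.g. predicted by a network.
    """
    h, w, ksize, _ = kernels.shape
    if img.shape != (h, w) or ksize % 2 == 0:
        raise ValueError("kernels must be (H, W, K, K) with odd K matching img")
    r = ksize // 2
    padded = np.pad(img, r, mode="reflect")
    windows = sliding_window_view(padded, (ksize, ksize))  # (H, W, K, K)
    # Each output pixel is the kernel-weighted sum of its own neighbourhood.
    return np.einsum("hwij,hwij->hw", windows, kernels)


# Usage with stand-in kernels normalized to sum to one per pixel.
rng = np.random.default_rng(0)
img = rng.random((32, 32))
kernels = rng.random((32, 32, 5, 5))
kernels /= kernels.sum(axis=(2, 3), keepdims=True)
restored = apply_per_pixel_kernels(img, kernels)
```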

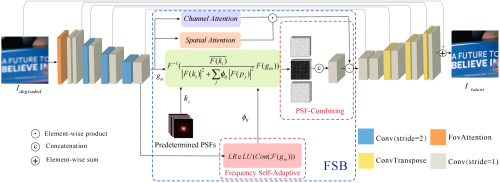

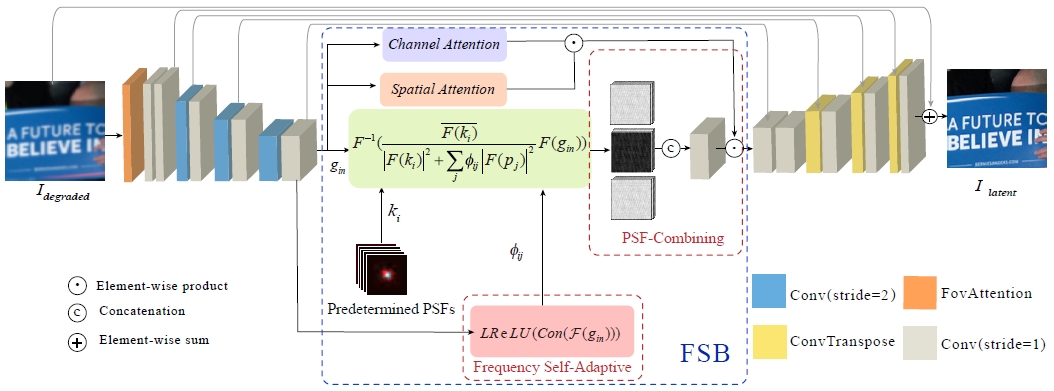
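The latent-space deconvolution formula given next applies a Wiener-style filter for each kernel \(k_i\). The terms \(\phi_{ij} \cdot |\mathcal{F}(p_j)|^2\) act as regularizers in the denominator, and the results are summed with weights \(\eta_i\). The NumPy sketch below evaluates that expression on one image under assumptions not stated in the source:

- \(\eta_i\) and \(\phi_{ij}\) are scalars;
- inputs are grayscale 2D arrays;
- boundaries are circular because the computation is FFT-based;
- a small `eps` is added to the denominator for numerical safety.

The helper `psf2otf` is illustrative and is not a library call.

```python
import numpy as np


def psf2otf(psf: np.ndarray, shape) -> np.ndarray:
    """Zero-pad a PSF to `shape`, move its center to (0, 0), and take the 2D FFT."""
    padded = np.zeros(shape)
    kh, kw = psf.shape
    padded[:kh, :kw] = psf
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(padded)


def latent_deconv(degraded, kernels, priors, eta, phi, eps=1e-8):
    """Evaluate sum_i F^-1(eta_i * conj(K_i) / (|K_i|^2 + sum_j phi_ij |P_j|^2) * F(I))."""
    f_deg = np.fft.fft2(degraded)
    prior_power = [np.abs(psf2otf(p, degraded.shape)) ** 2 for p in priors]
    latent = np.zeros(degraded.shape)
    for i, k in enumerate(kernels):
        k_otf = psf2otf(k, degraded.shape)
        # Regularization term; evaluates to 0 when no priors are given.
        reg = sum(phi[i][j] * prior_power[j] for j in range(len(priors)))
        wiener = eta[i] * np.conj(k_otf) / (np.abs(k_otf) ** 2 + reg + eps)
        latent += np.real(np.fft.ifft2(wiener * f_deg))
    return latent


# Usage: blur with a toy PSF (circularly), then deconvolve.
rng = np.random.default_rng(0)
sharp = rng.random((32, 32))
k = np.zeros((3, 3))
k[1, 1], k[1, 2] = 0.8, 0.2                    # toy PSF with no spectral zeros
blurred = np.real(np.fft.ifft2(np.fft.fft2(sharp) * psf2otf(k, sharp.shape)))
grad_x = np.array([[1.0, -1.0]])               # toy regularization filter p_1
restored = latent_deconv(blurred, [k], [grad_x], eta=[1.0], phi=[[1e-3]])
```

With \(\phi = 0\) and a kernel whose spectrum has no zeros, the expression reduces to exact inverse filtering. Increasing \(\phi\) trades exactness for robustness to noise.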

The key to performing deconvolution in latent space can be formulated as:

\[I_{latent}(x,y)=\sum_{i}^{m} \mathcal{F}^{-1}\left(\eta_{i} \cdot \frac{\mathrm{conj}(\mathcal{F}(k_{i}))}{|\mathcal{F}(k_{i})|^{2}+\sum_{j}^{n} \phi_{ij} \cdot |\mathcal{F}(p_{j})|^{2}} \cdot \mathcal{F}(I_{degraded}(x,y))\right)\]

Experiments:

We show some visualizations here; for detailed comparisons and illustrations, please refer to our paper.

Our Main Observations:

- The degradation of one image can be transferred, which enables more accurate data-pair generation. As mentioned before, we constructed a degradation framework that estimates the spatially variant PSFs of a specific camera, including but not limited to devices that show image quality mutation. The framework generates authentic imaging results that resemble real-world photographs, without the need for extensive shooting, registration, and color correction.

- The imaging quality of low-end mobile terminals has the potential to surpass that of high-end DSLRs.

Limitations:

- The representation only works for one specific camera; transferring it to another device requires retraining.

- Overfitting can introduce strange textures in some high-resolution natural images.