Imaging Simulation

How can we synthesize the imaging results of an optical system?

Abstract:

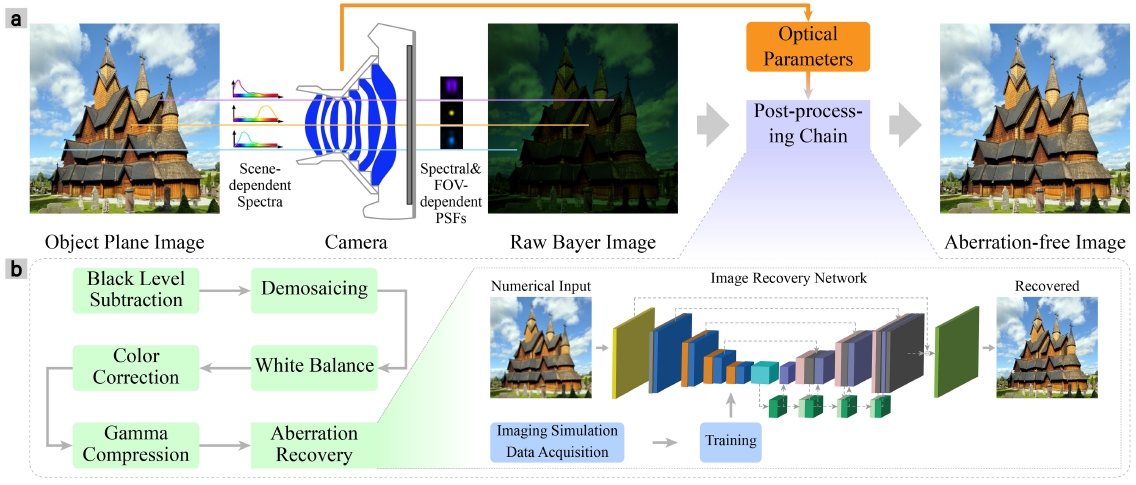

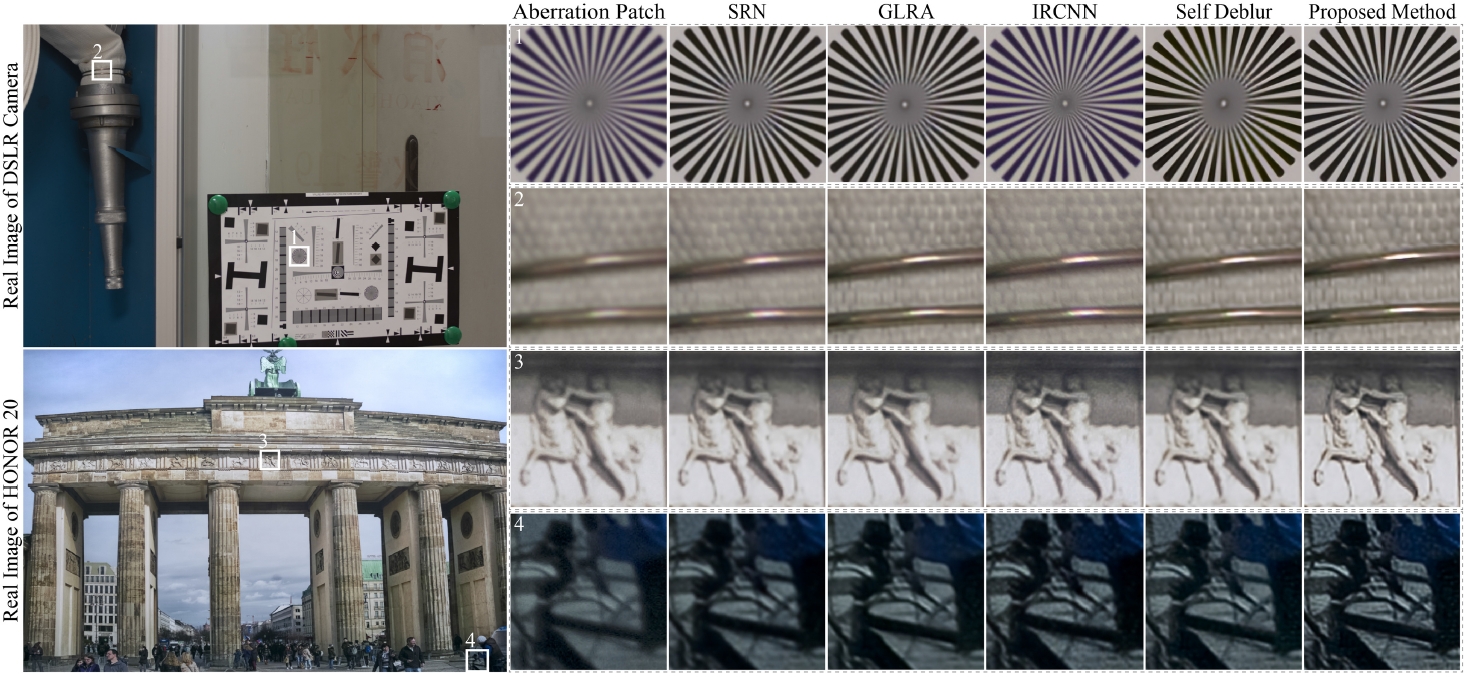

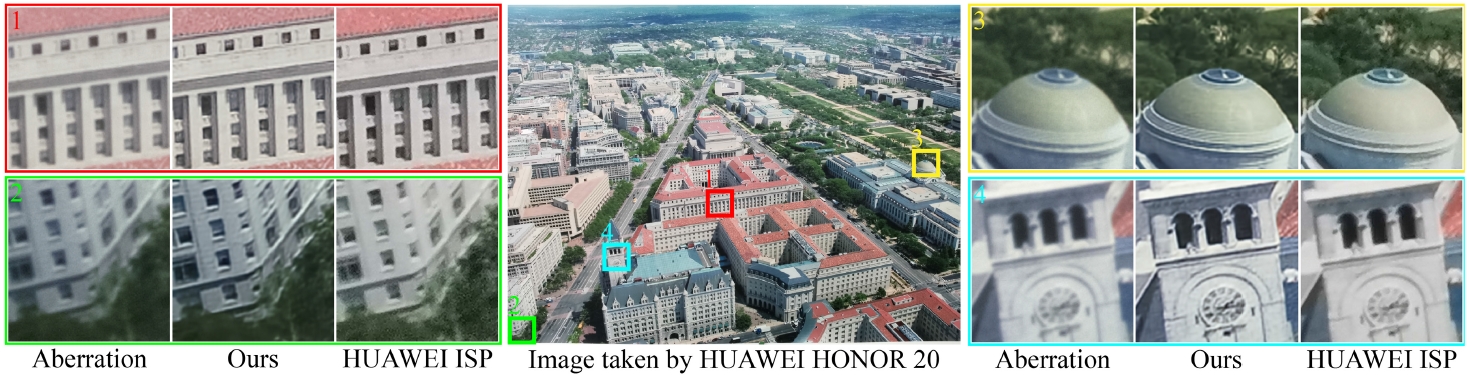

As the popularity of mobile photography continues to grow, considerable effort is being invested in the reconstruction of degraded images. Due to the spatial variation in optical aberrations, which cannot be avoided during the lens design process, recent commercial cameras have shifted some of these correction tasks from optical design to postprocessing systems. However, without engaging with the optical parameters, these systems achieve only limited correction of aberrations. In this work, we propose a practical method for recovering the degradation caused by optical aberrations. Specifically, we establish an imaging simulation system based on our proposed optical point spread function (PSF) model. Given the optical parameters of a camera, it generates the imaging results of that specific device. To perform the restoration, we design a spatial-adaptive network model and train it on synthetic data pairs generated by the imaging simulation system, eliminating the overhead of capturing training data through extensive shooting and registration. Moreover, we comprehensively evaluate the proposed method both in simulation and experimentally, using a customized digital single-lens reflex (DSLR) camera lens and a HUAWEI HONOR 20, respectively. The experiments demonstrate that our solution successfully removes spatially variant blur and color dispersion. Compared with state-of-the-art deblurring methods, the proposed approach achieves better results with a lower computational overhead. Moreover, the reconstruction technique does not introduce artificial texture and is convenient to transfer to current commercial cameras.

Background:

Different optical systems exhibit different aberration behavior. Our aim is to find a general way to correct the various degradations caused by optical aberrations.

Method:

The goal of our work is to propose a practical method for recovering the degradation caused by optical aberrations. To achieve this,

-

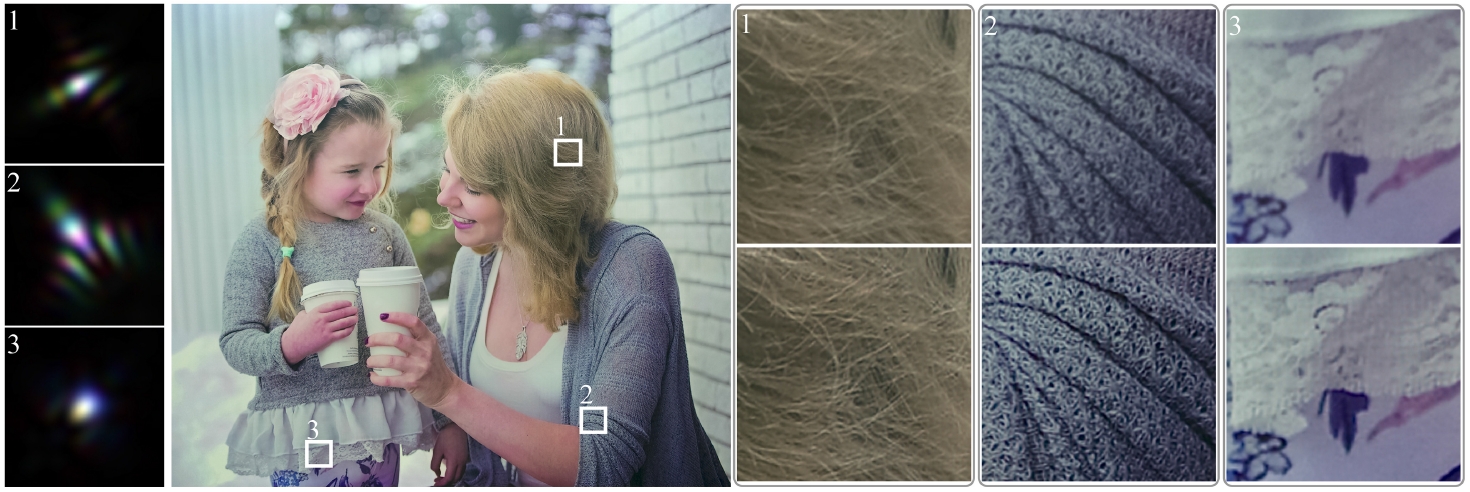

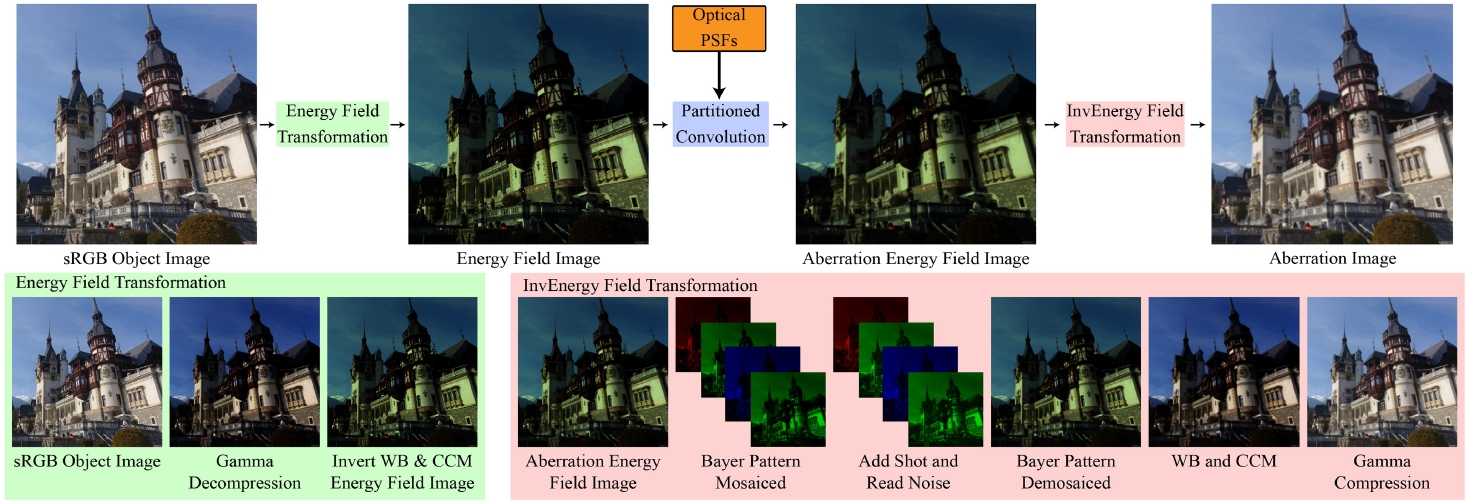

we first develop an imaging simulation system for dataset generation, which simulates the imaging results of a specific camera and generates a large training corpus for deep learning methods.

-

then, to eliminate the blurring, displacement, and chromatic aberrations caused by lens design, we design a spatially adaptive network architecture and insert it into the postprocessing chain.

In Imaging Simulation, we

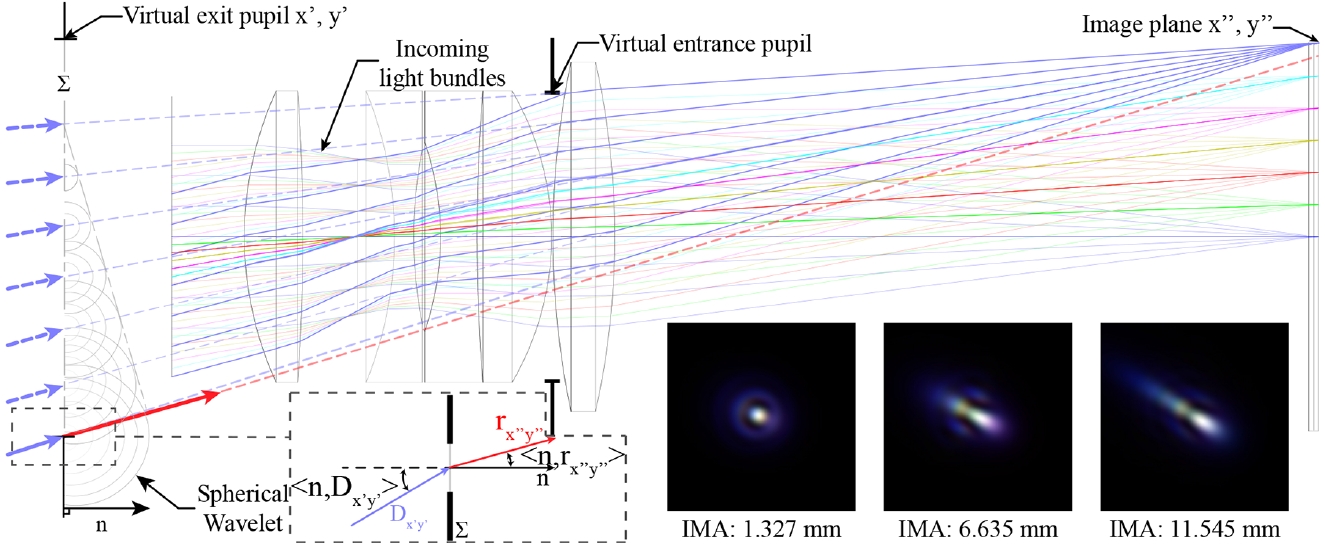

- Built the optical PSF model based on ray tracing and coherent superposition, which accounts for both the geometric propagation and the wave properties of light.

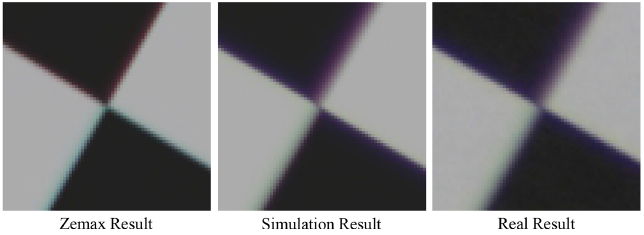

- Constructed the imaging simulation framework by combining the PSF model with an invertible ISP pipeline, so that it accurately synthesizes the effects of optical aberrations. We find it more reliable than a commercial optical design program (e.g., Zemax) and other SOTA algorithms.
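To make the idea of coherent superposition concrete, here is a minimal sketch. It does not reproduce the ray-traced model described above. Instead it swaps in the standard Fourier-optics shortcut: complex amplitudes over a sampled pupil are summed with an FFT, and the intensity PSF is the squared magnitude of the result. The function name `psf_from_wavefront`, the optical path difference input, and the defocus example are all assumptions made for illustration.

```python
import numpy as np

def psf_from_wavefront(opd_waves, aperture_mask, pad=4):
    """Return a normalized intensity PSF from a sampled pupil.

    opd_waves: 2-D optical path difference over the pupil, in wavelengths
               (hypothetical input; in practice it could come from ray tracing).
    aperture_mask: 2-D array, 1 inside the aperture and 0 outside.
    pad: zero-padding factor that sets how finely the PSF is sampled.
    """
    n = opd_waves.shape[0]
    # Complex pupil function: amplitude from the aperture, phase from the OPD.
    pupil = aperture_mask * np.exp(2j * np.pi * opd_waves)
    padded = np.zeros((pad * n, pad * n), dtype=complex)
    padded[:n, :n] = pupil
    # Coherent superposition: add complex amplitudes (done here by the FFT),
    # and only then take the squared magnitude to obtain intensity.
    field = np.fft.fftshift(np.fft.fft2(padded))
    psf = np.abs(field) ** 2
    return psf / psf.sum()

n = 64
y, x = np.mgrid[-1:1:n * 1j, -1:1:n * 1j]
r2 = x ** 2 + y ** 2
mask = (r2 <= 1.0).astype(float)
defocus = 0.5 * (2 * r2 - 1) * mask  # assumed aberration: 0.5 waves of defocus

psf_ideal = psf_from_wavefront(np.zeros((n, n)), mask)
psf_defocus = psf_from_wavefront(defocus, mask)
print(psf_ideal.max(), psf_defocus.max())
```

Because both PSFs are normalized to unit energy, the lower peak of `psf_defocus` shows how the aberration spreads light away from the ideal focus.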

In Aberration Correction, we

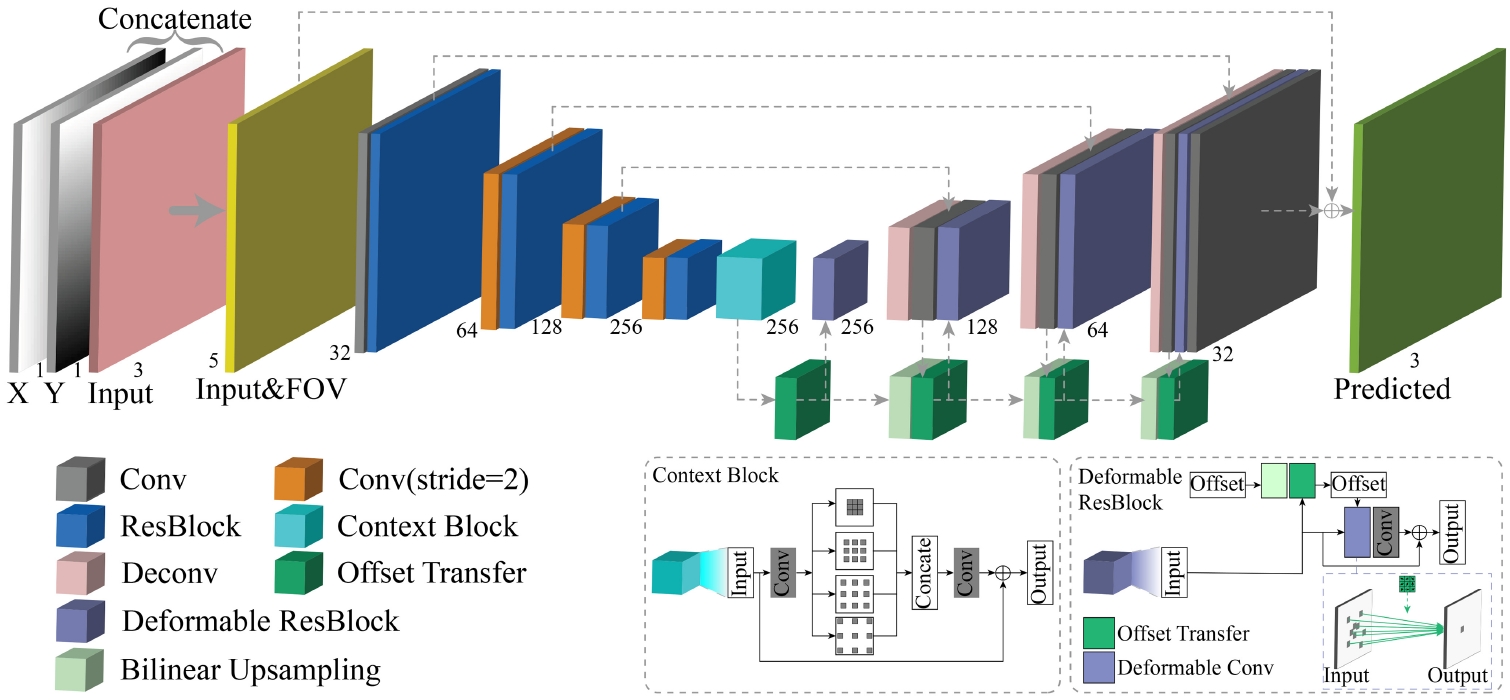

- Designed a novel spatial-adaptive CNN architecture, which introduces an FOV input, deformable ResBlocks, and a context block to adapt to spatially variant degradation.
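One plausible way to give a network an FOV input is to append per-pixel coordinate maps to the image channels, so that convolutional filters can condition on position in the field. The sketch below assumes this encoding. The helper names `fov_channels` and `add_fov_input`, and the choice to normalize by the half-diagonal, are hypothetical and may differ from the actual architecture.

```python
import numpy as np

def fov_channels(height, width):
    """Build two per-pixel coordinate maps, normalized by the half-diagonal.

    With this normalization the image corners sit at radius 1 from the
    optical center, which is assumed to be the image center.
    """
    ys = np.arange(height) - (height - 1) / 2.0
    xs = np.arange(width) - (width - 1) / 2.0
    yy, xx = np.meshgrid(ys, xs, indexing="ij")
    half_diag = np.hypot((height - 1) / 2.0, (width - 1) / 2.0)
    return np.stack([yy / half_diag, xx / half_diag], axis=0)

def add_fov_input(image_chw):
    """Concatenate the FOV maps to a channels-first image."""
    _, h, w = image_chw.shape
    return np.concatenate([image_chw, fov_channels(h, w)], axis=0)

image = np.random.rand(3, 48, 64)   # stand-in for an RGB crop
net_input = add_fov_input(image)    # shape (5, 48, 64)
print(net_input.shape)
```

When training on crops, the maps would need to be cut from the full-frame coordinates rather than rebuilt per crop, so that each patch keeps its true position in the field.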

Experiments:

Although trained only on synthetic data, the proposed deep-learning method achieves excellent restoration on natural scenes. First, we evaluate the performance of the proposed model:

In the implementation,

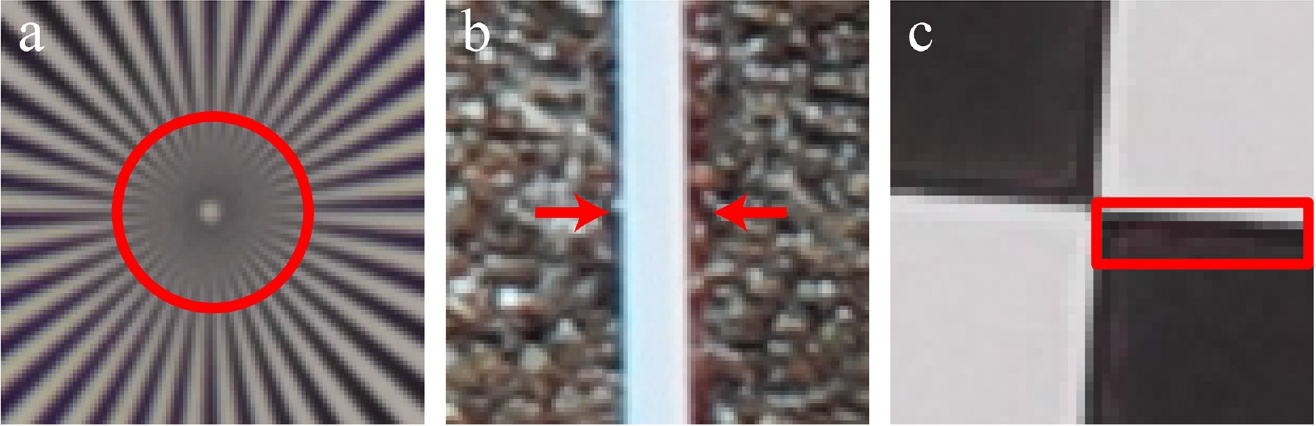

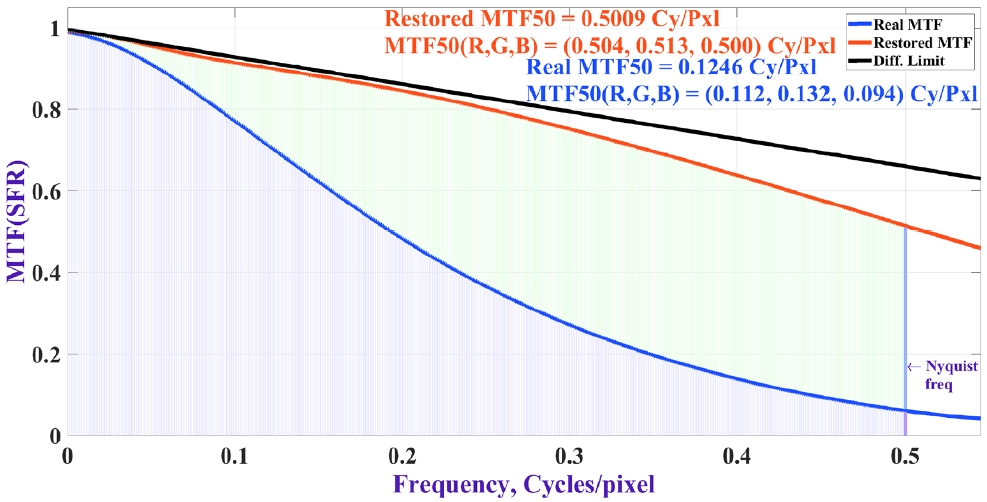

Where is the limit of the correction? We use MTF evaluations to answer this question.
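As a reminder of what an MTF evaluation measures, the sketch below computes an MTF as the normalized magnitude of the Fourier transform of a PSF. It uses Gaussian PSFs as stand-ins for a sharp and a blurry system. The function names and the Gaussian test data are assumptions for illustration, not the measurement procedure used in the experiments.

```python
import numpy as np

def mtf_from_psf(psf):
    """Return the 2-D MTF: |FFT(PSF)| scaled so that the zero-frequency value is 1."""
    mtf = np.abs(np.fft.fft2(psf))
    return np.fft.fftshift(mtf / mtf[0, 0])

def gaussian_psf(size, sigma):
    """Synthetic unit-energy Gaussian PSF (illustrative test data only)."""
    ax = np.arange(size) - size // 2
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    psf = np.outer(g, g)
    return psf / psf.sum()

size = 65
center = size // 2
mtf_sharp = mtf_from_psf(gaussian_psf(size, 1.0))
mtf_blurry = mtf_from_psf(gaussian_psf(size, 3.0))

# Horizontal profiles from zero frequency to the highest sampled frequency.
profile_sharp = mtf_sharp[center, center:]
profile_blurry = mtf_blurry[center, center:]
print(profile_sharp[5], profile_blurry[5])
```

Comparing such profiles before and after correction, against the profile of an aberration-free system, indicates how much contrast the restoration recovers at each spatial frequency.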

Our Main Observation:

When the forward simulation is comprehensive, the imaging results of a camera can be accurately predicted. The PSF calculation and the energy transform pipeline are of equal importance. As a result, the proposed deep-learning method achieves excellent restoration on natural scenes even though it is trained only on synthetic data.
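The role of the energy transform can be illustrated with a small sketch: optical blur mixes light energy, so it should be applied to linear intensities rather than to tone-mapped pixel values. The code assumes a simple power-law curve with `GAMMA = 2.2` as a stand-in for a full invertible ISP, and a 1-D box kernel as a stand-in for a PSF. Both choices, and all function names, are illustrative assumptions.

```python
import numpy as np

GAMMA = 2.2  # assumed power-law tone curve, standing in for a full invertible ISP

def to_linear(img):
    """Invert the tone curve: display values -> linear energy."""
    return np.clip(img, 0.0, 1.0) ** GAMMA

def to_display(lin):
    """Apply the tone curve: linear energy -> display values."""
    return np.clip(lin, 0.0, 1.0) ** (1.0 / GAMMA)

def blur_rows(img, kernel):
    """Convolve each row with a 1-D kernel, using edge padding to keep the size."""
    pad = len(kernel) // 2
    padded = np.pad(img, ((0, 0), (pad, pad)), mode="edge")
    return np.stack([np.convolve(row, kernel, mode="valid") for row in padded])

def simulate(img_display, kernel):
    """Blur in the energy domain, then return to display values."""
    return to_display(blur_rows(to_linear(img_display), kernel))

kernel = np.ones(5) / 5.0                                  # stand-in for a PSF
step = np.tile(np.r_[np.zeros(8), np.ones(8)], (4, 1))     # dark-to-bright edge

energy_domain = simulate(step, kernel)    # blur applied to linear energy
display_domain = blur_rows(step, kernel)  # blur applied directly to pixel values
print(energy_domain[0, 6:10])
print(display_domain[0, 6:10])
```

The two results differ along the edge, with the energy-domain version appearing brighter in the transition. A simulator that skips the transform would therefore produce training pairs whose blur does not match that of a real camera.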